AI Explainability: Building Trust in AI Systems with 3 Proven Methods

AI Explainability is crucial for building trust in AI systems; this article explores three proven methods to enhance transparency and understanding in AI.

In today’s world, artificial intelligence (AI) is increasingly integrated into many aspects of our lives, and understanding how these systems arrive at their decisions is no longer a luxury but a necessity. This is where AI explainability comes into play: it helps ensure that AI systems are not only effective but also transparent and trustworthy.

Understanding the Importance of AI Explainability

AI explainability is the capability of an AI system to articulate its decision-making process in a manner that is easily understandable by humans. This transparency is vital for establishing trust and confidence in AI technologies.

Without explainability, AI systems can be perceived as “black boxes,” where the reasoning behind their outputs is opaque. This lack of transparency can lead to skepticism, especially in critical applications such as healthcare and finance.

Why is AI Explainability Essential?

AI explainability addresses several key concerns and offers numerous benefits. Here’s a brief overview:

- Building Trust: Transparency fosters trust among users, stakeholders, and regulators.

- Ensuring Accountability: Explainable AI allows for better monitoring and accountability of AI-driven decisions.

- Improving Performance: Understanding AI reasoning can help identify biases and improve model accuracy.

- Complying with Regulations: Many regulations, such as GDPR, require transparency in automated decision-making processes.

AI explainability is not merely a technical challenge but also an ethical and regulatory imperative. As AI systems become more prevalent, ensuring their transparency and trustworthiness will be essential.

Method 1: Rule-Based Systems

Rule-based systems offer a straightforward approach to AI explainability. These systems operate on predefined rules that dictate how decisions are made, providing a clear and understandable logic flow.

In a rule-based system, decisions are based on “if-then” statements. These rules are explicitly programmed, making it easy to trace the decision-making process back to its origin.

How Rule-Based Systems Work

Rule-based systems consist of three main components:

- Facts: The input data or information that the system uses to make decisions.

- Rules: A set of “if-then” statements that define the system’s logic.

- Inference Engine: The mechanism that applies the rules to the facts to reach a conclusion.

For example, in a medical diagnosis system, a rule might be, “If the patient has a fever and cough, then consider the possibility of influenza.” The inference engine would apply this rule based on the patient’s symptoms.
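The three components above (facts, rules, and an inference engine) can be sketched in a few lines of Python. This is a minimal forward-chaining illustration, not a production expert system. The `Rule` class, the `infer` function, and both rules are hypothetical names invented for this example, and the rules are not clinical guidance. The returned trace is what makes the system explainable, because every conclusion can be traced back to the rule that produced it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """An 'if-then' rule: if all conditions are known facts, conclude `conclusion`."""
    name: str
    conditions: frozenset[str]
    conclusion: str


def infer(facts: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Forward-chaining inference engine.

    Repeatedly fires any rule whose conditions are all satisfied, adding its
    conclusion to the known facts, until no new facts can be derived.
    Returns the final facts plus a human-readable trace of the fired rules.
    """
    known = set(facts)
    trace: list[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(
                    f"{rule.name}: {' and '.join(sorted(rule.conditions))} -> {rule.conclusion}"
                )
                changed = True
    return known, trace


# Hypothetical rules for illustration only, not real clinical guidance.
RULES = [
    Rule("R1", frozenset({"fever", "cough"}), "possible influenza"),
    Rule("R2", frozenset({"possible influenza", "shortness of breath"}), "refer to doctor"),
]

if __name__ == "__main__":
    conclusions, explanation = infer({"fever", "cough"}, RULES)
    for step in explanation:
        print(step)
```

Running it prints `R1: cough and fever -> possible influenza`. Adding `shortness of breath` to the input facts would also fire `R2`, and the trace would list both steps in the order they were applied.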

Advantages and Limitations

Rule-based systems offer several advantages:

- Transparency: The decision-making process is easy to understand and trace.

- Simplicity: These systems are relatively simple to implement and maintain.

- Predictability: The behavior of the system is predictable, as it follows predefined rules.

However, they also have limitations:

- Scalability: These systems can become complex and difficult to manage as the number of rules increases.

- Adaptability: Rule-based systems are not well-suited for handling uncertain or incomplete data.

- Expert Knowledge: Defining the rules accurately requires significant domain expertise.

Despite these limitations, rule-based systems remain a valuable tool for AI explainability, particularly in domains where clear and consistent rules can be defined.

Method 2: LIME (Local Interpretable Model-Agnostic Explanations)

LIME is a technique used to explain the predictions of any machine learning classifier by approximating it locally with an interpretable model.

LIME helps to understand which features are most important in influencing the prediction of a given data point. It does this by perturbing the input data and observing how the model’s predictions change.

How LIME Works

LIME follows these steps:

- Select a data point for explanation.

- Perturb the data point to create variations.

- Get predictions from the original model for these variations.

- Weight the variations based on their similarity to the original data point.

- Fit an interpretable model (e.g., linear model) to the weighted variations.

- Use the interpretable model to explain the prediction.
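The six steps above can be sketched from scratch with NumPy for a single tabular data point. This is a simplified, LIME-style illustration rather than the `lime` package's own implementation: `black_box` is a hypothetical model, and the Gaussian perturbations, exponential proximity kernel, and unregularized weighted least-squares surrogate are assumptions chosen for brevity. The reference package adds refinements such as feature discretization and regularization.

```python
import numpy as np


def black_box(X: np.ndarray) -> np.ndarray:
    """Hypothetical opaque model returning a probability for each row of X."""
    z = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 0.5 * X[:, 0] * X[:, 2]
    return 1.0 / (1.0 + np.exp(-z))


def lime_explain(predict, x, num_samples=5000, noise_scale=0.5, seed=0):
    """LIME-style local explanation for one tabular point x (step 1: x is given).

    Returns one weight per feature from a weighted linear surrogate model.
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    # Step 2: perturb the data point with Gaussian noise around x.
    Z = x + rng.normal(0.0, noise_scale, size=(num_samples, d))
    # Step 3: query the original model on the variations.
    y = predict(Z)
    # Step 4: weight each variation by its proximity to x (exponential kernel).
    kernel_width = 0.75 * np.sqrt(d)
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    # Step 5: fit an interpretable model, here weighted least squares on [1, Z].
    A = np.hstack([np.ones((num_samples, 1)), Z])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    # Step 6: the surrogate's slopes (intercept dropped) are the explanation.
    return coef[1:]


if __name__ == "__main__":
    x = np.array([0.0, 0.0, 0.0])
    for name, weight in zip(["f0", "f1", "f2"], lime_explain(black_box, x)):
        print(f"{name}: {weight:+.3f}")
```

For the point at the origin, `f0` should receive a positive weight, `f1` a negative one, and `f2`, which only matters through an interaction with `f0`, a weight near zero. The exact numbers depend on `noise_scale`, the kernel width, and the random seed.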

Example: Imagine using LIME to explain why a machine-learning model predicted that an image contains a cat. By perturbing regions of the image (superpixels) and observing how the prediction changes, LIME might highlight the parts of the image (e.g., the face, ears, or tail) that are most responsible for the “cat” prediction.

Benefits of Using LIME

- Model-Agnostic: It can be used with any machine learning model.

- Local Explanations: Focuses on explaining individual predictions, providing granular insights.

- Feature Importance: Identifies the most important features influencing the prediction.

Real-World Applications of LIME

LIME has been applied in various domains, including:

- Text Classification: Explaining why a document was classified as belonging to a specific category.

- Image Recognition: Highlighting the regions in an image that contributed to the classification result.

- Fraud Detection: Identifying the factors that led to a transaction being flagged as fraudulent.

With its ability to provide local, model-agnostic explanations, LIME helps break down complex AI systems into understandable components, thereby fostering trust and transparency.

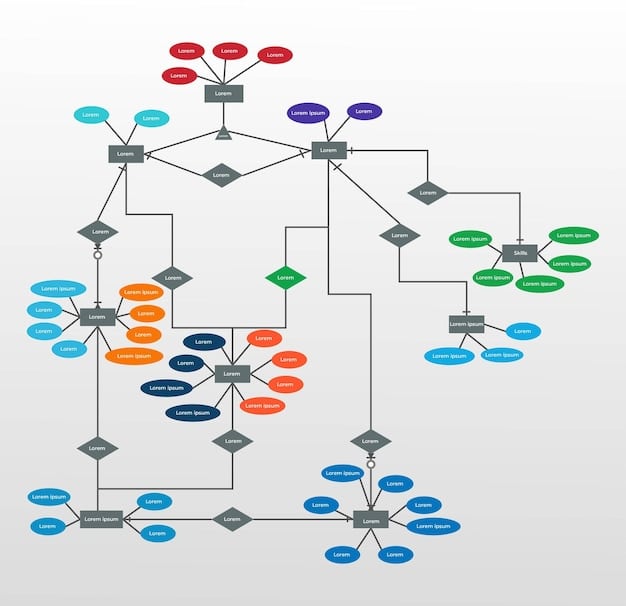

Method 3: SHAP (SHapley Additive exPlanations)

SHAP is another powerful method for explaining the output of machine learning models. It uses game-theoretic principles to assign each feature an importance value for a particular prediction.

A feature’s SHAP value is its Shapley value: a weighted average of the feature’s marginal contribution to the prediction over all possible coalitions (subsets) of the other features. This provides a comprehensive view of each feature’s impact on the model’s output.
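For reference, the standard Shapley value formula can be written as follows, with $F$ the set of all features, $S$ a coalition that excludes feature $i$, and $f_S$ the model evaluated using only the features in $S$. The weight is the fraction of all orderings of the features in which exactly the features in $S$ come before $i$, which is why the result is an average of marginal contributions. The second line is the local accuracy property, with $\phi_0$ the base (average) prediction.

```latex
\phi_i = \sum_{S \subseteq F \setminus \{i\}} \frac{|S|!\,\bigl(|F| - |S| - 1\bigr)!}{|F|!}\,\Bigl[f_{S \cup \{i\}}\bigl(x_{S \cup \{i\}}\bigr) - f_S\bigl(x_S\bigr)\Bigr]

f(x) = \phi_0 + \sum_{i \in F} \phi_i
```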

Understanding SHAP Values

SHAP values adhere to the following properties:

- Local Accuracy: The sum of the SHAP values equals the difference between the prediction and the average prediction.

- Missingness: Features that are missing have a SHAP value of zero.

- Consistency: If a model changes so that a feature’s marginal contribution increases or stays the same in every coalition, that feature’s SHAP value does not decrease.
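For a small model, SHAP values can be computed exactly by enumerating every coalition, which also makes it easy to check the local accuracy property. This sketch rests on stated assumptions: `model` is a hypothetical function, and a "missing" feature is replaced by its value in a single baseline point, so the base value is the baseline's prediction rather than an average over a dataset. Exact enumeration grows exponentially with the number of features, which is why practical SHAP tools rely on approximations or model-specific algorithms.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Hypothetical model with an interaction between features 0 and 1."""
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[1] - 0.5 * x[2]


def coalition_value(coalition, x, baseline):
    """f_S(x_S): features outside the coalition are set to their baseline values."""
    z = [x[j] if j in coalition else baseline[j] for j in range(len(x))]
    return model(z)


def shapley_values(x, baseline):
    """Exact Shapley values by enumerating every coalition of the other features."""
    n = len(x)
    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                gain = coalition_value(s | {i}, x, baseline) - coalition_value(s, x, baseline)
                phi += weight * gain
        phis.append(phi)
    return phis


if __name__ == "__main__":
    x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
    phis = shapley_values(x, baseline)
    print("SHAP values:", [round(p, 6) for p in phis])
    # Local accuracy: the values sum to f(x) minus the base value f(baseline).
    print("sum:", round(sum(phis), 6), "f(x) - f(baseline):", model(x) - model(baseline))
```

For `x = [1, 2, 3]` and an all-zero baseline, the values come out as `[5.0, 5.0, -1.5]` up to floating-point rounding: the interaction term `3 * x0 * x1 = 6` is split evenly between features 0 and 1, and the three values sum to `model(x) - model(baseline) = 8.5`.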

Applying SHAP in Practice

SHAP can be used to understand individual predictions or to gain insights into the overall behavior of the model.

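By aggregating SHAP values across many predictions, for example by averaging their absolute values for each feature, SHAP also yields global feature importance rankings that show how each feature affects the model in general. These rankings are often visualized in a SHAP summary plot.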
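A common way to turn local SHAP values into a global ranking is to average their absolute values per feature. The sketch below assumes a linear model with independent features and uses the column means as the baseline, a special case in which each SHAP value has the closed form $w_j (x_j - \bar{x}_j)$, so no coalition enumeration is needed. The weights, feature names, and data are hypothetical.

```python
from statistics import fmean

# Hypothetical linear model and data, for illustration only.
NAMES = ["income", "debt_ratio", "age"]
WEIGHTS = [0.5, -2.0, 0.1]
X = [
    [50.0, 0.2, 30.0],
    [60.0, 0.5, 45.0],
    [40.0, 0.1, 25.0],
    [70.0, 0.4, 50.0],
]


def linear_shap(weights, rows):
    """SHAP values for a linear model f(x) = b + sum_j w_j * x_j.

    Assumes independent features and the column means as the baseline,
    in which case phi_j = w_j * (x_j - mean_j) exactly.
    """
    means = [fmean(col) for col in zip(*rows)]
    return [[w * (xj - m) for w, xj, m in zip(weights, row, means)] for row in rows]


def global_importance(shap_matrix, feature_names):
    """Rank features by mean absolute SHAP value across all rows."""
    scores = {
        name: fmean(abs(row[j]) for row in shap_matrix)
        for j, name in enumerate(feature_names)
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, importance in global_importance(linear_shap(WEIGHTS, X), NAMES):
        print(f"{name:>10}: {importance:.2f}")
```

Here `debt_ratio` has the largest coefficient in magnitude but ranks last (income 5.00, age 1.00, debt_ratio 0.30), because SHAP values are measured in the model's output units and so reflect how much each feature actually varies in the data, not just the size of its coefficient.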

Advantages of SHAP

SHAP offers several advantages over other explanation methods:

- Completeness: It considers all possible feature combinations.

- Fairness: It assigns feature importance in a fair and consistent manner.

- Global Insights: It can be used to understand the overall behavior of the model.

SHAP values can be used to identify biases, detect errors, and improve the overall performance of machine learning models. By providing a clear and quantitative measure of feature importance, SHAP helps build trust in AI systems.

Integrating AI Explainability into AI Ethics & Governance

Integrating AI explainability into AI ethics and governance is crucial for ensuring responsible AI development and deployment. By prioritising transparency and understanding, organisations can mitigate risks and build public trust.

AI ethics and governance encompasses a set of principles and practices aimed at ensuring that AI systems are developed and used in a way that is ethical, legal, and socially responsible. AI explainability plays a vital role in this context by providing a means to understand and scrutinise AI decision-making processes.

Key Considerations for Ethical AI Governance

- Transparency Standards: Establish clear standards for AI transparency and explainability.

- Bias Detection: Implement mechanisms for detecting and mitigating bias in AI systems.

- Accountability Frameworks: Develop frameworks for holding AI systems accountable for their decisions.

Best Practices for Implementing AI Explainability

- Select Appropriate Methods: Choose explainability methods that are appropriate for the type of AI model and the specific use case.

- Document Explanations: Document the explanations generated by AI systems for auditing and compliance purposes.

- Train Stakeholders: Provide training to stakeholders on how to interpret and use AI explanations.

Integrating AI explainability into AI ethics and governance frameworks is a key step towards building trustworthy AI systems. This approach enables organisations to proactively address ethical concerns and ensure that AI technologies are used for the benefit of society.

The Future of AI Explainability

As AI technologies continue to advance, the field of AI explainability will need to evolve to meet new challenges. Future research is likely to focus on developing more sophisticated and scalable explanation methods.

Complex model families, such as deep neural networks and reinforcement learning agents, pose unique challenges for explainability. New methods are needed to understand how these models reach their decisions.

Emerging Trends in AI Explainability

- Counterfactual Explanations: Focus on identifying the changes needed to alter a model’s prediction.

- Causal Explanations: Aim to understand the causal relationships between features and predictions.

- Interactive Explanations: Allow users to interact with the model to explore different scenarios and explanations.
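Counterfactual explanations are easiest to see on a linear scoring model, where the change needed along a single feature to reach a decision threshold has a closed form. The model, weights, threshold, and applicant below are hypothetical, and practical counterfactual methods usually search over several features at once under plausibility constraints; this is only a minimal illustration of the idea.

```python
# Hypothetical linear credit-scoring model: approve if score >= THRESHOLD.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.1}
BIAS = 0.0
THRESHOLD = 1.0
APPLICANT = {"income_k": 40.0, "debt_ratio": 0.5, "years_employed": 2.0}


def score(applicant, weights, bias):
    """Linear score: bias + sum of weight * feature value."""
    return bias + sum(weights[k] * applicant[k] for k in weights)


def single_feature_counterfactuals(applicant, weights, bias, threshold, actionable):
    """For each actionable feature, return the value it would need (all other
    features held fixed) for the score to reach the threshold exactly."""
    gap = threshold - score(applicant, weights, bias)
    counterfactuals = {}
    for k in actionable:
        if weights[k] != 0:
            counterfactuals[k] = applicant[k] + gap / weights[k]
    return counterfactuals


if __name__ == "__main__":
    current = score(APPLICANT, WEIGHTS, BIAS)
    print(f"score = {current:.2f}, approved = {current >= THRESHOLD}")
    for feature, needed in single_feature_counterfactuals(
        APPLICANT, WEIGHTS, BIAS, THRESHOLD, list(WEIGHTS)
    ).items():
        print(f"approved if {feature} were {needed:.2f} instead of {APPLICANT[feature]:.2f}")
```

For this applicant (score 0.3, threshold 1.0), the sketch reports that the decision would flip if `income_k` rose from 40 to 57.5, if `debt_ratio` fell from 0.5 to about 0.27, or if `years_employed` rose from 2 to 9, in each case with the other features unchanged.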

The Role of AI Explainability in Building Trust

The future of AI explainability is closely linked to the broader goal of building trust in AI systems. As AI becomes more integrated into our lives, ensuring its transparency and accountability will be essential.

AI explainability is not just a technical challenge but also a social and ethical imperative. By prioritising transparency and understanding, we can ensure that AI technologies are used in a responsible and beneficial manner.

| Key Point | Brief Description |
|---|---|
| 🔑 Rule-Based Systems | Transparent AI with predefined ‘if-then’ rules. |
| 💡 LIME | Explains predictions by approximating models locally. |
| 📊 SHAP | Uses game theory for feature importance in predictions. |
| 🛡️ AI Governance | Ethical AI practices ensuring responsible development. |

FAQ

AI Explainability refers to the ability to understand and articulate how an AI system makes decisions. This ensures transparency and trustworthiness in AI outcomes.

AI explainability is important for building trust, ensuring accountability, improving performance, and complying with regulations. It helps make AI systems more reliable.

Rule-Based Systems use predefined ‘if-then’ statements, making the decision-making process transparent and easy to follow because the logic is clearly defined.

LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) are methods used to explain the predictions of machine learning models, enhancing trust and transparency.

Integrating AI Explainability involves establishing transparency standards, detecting bias, and developing accountability frameworks to ensure responsible AI development and usage.

Conclusion

In conclusion, enhancing AI explainability is essential for creating transparent and trustworthy AI technologies. By implementing methods like rule-based systems, LIME, and SHAP, alongside robust AI ethics and governance frameworks, we can help ensure that AI benefits society responsibly.