Explainable AI Research: Q1 2025 Breakthroughs in U.S. Transparency

The latest explainable AI research from Q1 2025 has yielded significant breakthroughs in the U.S., particularly in improving model transparency and interpretability and in building greater trust in complex AI systems used in critical applications.

Transparent artificial intelligence has never mattered more, especially in the United States, where AI is being integrated rapidly across sensitive sectors. Understanding the ‘why’ behind AI decisions is essential for trust, accountability, and ethical deployment. This article examines explainable AI research from Q1 2025 and highlights three developments that are significantly affecting model transparency in the U.S.

The Rise of Causal Explainability Frameworks

In the first quarter of 2025, U.S. AI research made a significant leap in causal explainability frameworks. Traditional explainable AI (XAI) methods have largely reported associations, telling us ‘what’ features influenced a decision. The new generation of frameworks aims to uncover the ‘why’: the causal relationships that drive a model’s outcomes. This shift matters in high-stakes applications, where correlation alone is insufficient for trust or effective intervention.

Researchers across leading U.S. universities and tech companies have developed sophisticated algorithms that can disentangle complex causal dependencies within black-box models. These frameworks move beyond simple feature importance scores, providing a deeper understanding of how specific inputs directly cause particular outputs, rather than just being associated with them. This advancement is particularly impactful in fields like healthcare and financial services, where regulatory scrutiny demands robust justifications for AI-driven decisions.

Advancing Beyond Correlation

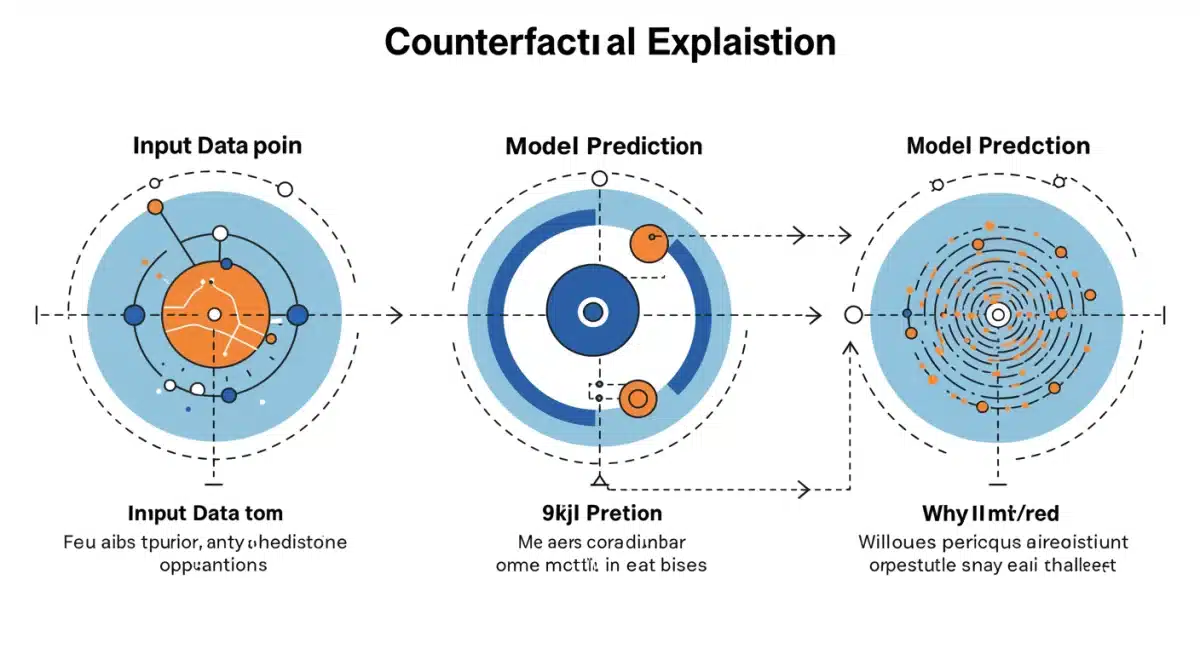

For years, XAI tools have mostly provided post-hoc explanations that, while helpful, can mislead when they highlight correlations rather than causes. The new causal frameworks give a more robust picture by simulating interventions and observing their effects, much like a scientific experiment. Practitioners can then see not just which features are important, but how changing those features would causally alter the model’s prediction.

- Interventional Explanations: Simulating changes to input features to observe causal impact on output.

- Counterfactual Reasoning: Identifying the minimal change to an input that would alter a prediction to a desired outcome.

- Structural Causal Models: Representing causal relationships as structural equations over a directed graph and using them to infer causal effects within AI systems.

The implications for model debugging and fairness are immense. By understanding causal pathways, developers can pinpoint and rectify biases that might be hidden within complex correlational structures. This provides a powerful tool for ensuring AI systems operate equitably and effectively.
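The bias-hunting idea can be illustrated with a counterfactual check along a causal pathway, in the spirit of counterfactual fairness tests. The sketch below assumes a hypothetical SCM in which a protected attribute `a` feeds a proxy feature (`proxy := 0.8 * a + u_proxy`), and a decision rule that never sees `a` directly. For each individual it keeps the exogenous noise fixed, flips `a`, recomputes the proxy, and counts how often the decision changes. All names and coefficients are illustrative assumptions.

```python
import random

def make_population(n, seed=1):
    """Each individual is (a, u_proxy, income); exogenous noise is simulated, so it is known."""
    rng = random.Random(seed)
    return [(rng.randint(0, 1), rng.gauss(0.0, 0.5), rng.gauss(0.0, 1.0)) for _ in range(n)]

def proxy_of(a, u_proxy):
    # Structural equation for a proxy feature (e.g. a location-derived score).
    return 0.8 * a + u_proxy

def uses_proxy(proxy, income):
    # Hypothetical decision rule that never reads `a`, but does read the proxy.
    return 1.5 * proxy + income > 1.0

def ignores_proxy(proxy, income):
    return income > 1.0

def counterfactual_flip_rate(decide, population):
    """Share of individuals whose decision changes when `a` is flipped
    and the proxy is recomputed from the same exogenous noise."""
    flips = 0
    for a, u_proxy, income in population:
        factual = decide(proxy_of(a, u_proxy), income)
        counterfactual = decide(proxy_of(1 - a, u_proxy), income)
        flips += factual != counterfactual
    return flips / len(population)

people = make_population(2000)
print("model using proxy:   ", counterfactual_flip_rate(uses_proxy, people))
print("model ignoring proxy:", counterfactual_flip_rate(ignores_proxy, people))
```

Because the data are simulated, the exogenous noise is known exactly; with real data it would first have to be inferred from a fitted causal model, which is where most of the practical difficulty lies.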

In essence, these new causal explainability frameworks are setting a new standard for transparency, pushing the boundaries of what it means to truly understand an AI model in a U.S. regulatory and ethical context. They empower developers and users with the ability to interrogate models at a deeper, more fundamental level, fostering greater confidence in their deployment.

Breakthroughs in Real-time Explainability for Edge AI

The second major breakthrough in Q1 2025 pertains to real-time explainability, particularly for AI models deployed on edge devices. As AI decentralizes and moves closer to data sources, the challenge of providing immediate, understandable explanations without significant computational overhead has been substantial. New research originating from U.S. innovation hubs addresses this critical need, enabling transparent AI operations even in resource-constrained environments.

These innovations focus on developing lightweight explanation generators that can run concurrently with the inference process on edge devices. This means that instead of sending data back to a powerful central server for explanation generation, the explanations are produced locally, almost instantaneously. This is vital for applications requiring immediate decision-making and accountability, such as autonomous vehicles, smart manufacturing, and remote patient monitoring.
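One way to see how an explanation can ride along with inference is a linear scorer: the per-feature contributions `w_i * (x_i - baseline_i)` come out of the same pass that produces the score, so the extra cost is a few multiplications. For a linear model with independent features and the feature means as baseline, these contributions coincide with SHAP values. The sketch assumes such a model; `LinearEdgeModel`, its weights, and the braking-related feature names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class LinearEdgeModel:
    """Hypothetical on-device linear scorer with a fixed reference input."""
    names: list[str]
    weights: list[float]
    bias: float
    baseline: list[float]  # e.g. training-set feature means

    def predict_with_explanation(self, x):
        """Return (score, contributions) from a single pass over the features."""
        score = self.bias
        contributions = {}
        for name, w, xi, bi in zip(self.names, self.weights, x, self.baseline):
            score += w * xi
            contributions[name] = w * (xi - bi)  # contribution relative to the baseline
        return score, contributions

# Hypothetical "brake urgency" scorer: closer obstacles and higher speed raise the score.
edge_model = LinearEdgeModel(
    names=["obstacle_distance_m", "speed_mps"],
    weights=[-0.5, 0.3],
    bias=2.0,
    baseline=[10.0, 5.0],
)
score, why = edge_model.predict_with_explanation([4.0, 8.0])
print(score, why)
```

The contributions sum to the difference between this input's score and the baseline's score, which gives operators a quick consistency check on the device.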

Optimized Explanation Algorithms

The key to these advancements lies in highly optimized algorithms and novel architectural designs that minimize computational resources while maximizing the clarity and fidelity of explanations. Researchers have explored methods like model distillation for explanation, where a complex explanation model is compressed into a simpler, more efficient one suitable for edge deployment. Another approach involves developing intrinsically interpretable models that are designed to be explainable from the ground up, rather than relying on post-hoc techniques.

- Lightweight LIME/SHAP Variants: Adapted versions of popular XAI methods optimized for lower computational footprints.

- Hardware-Accelerated Explanation: Leveraging specialized edge hardware (e.g., AI accelerators) to speed up explanation generation.

- Pre-computed Explanation Maps: Generating partial explanations offline that can be quickly referenced during real-time inference.
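The pre-computed explanation map idea can be sketched as a lookup table: a comparatively expensive attribution (here a simple occlusion score that replaces one feature at a time with a baseline value) is computed offline over a coarse grid of inputs, and at run time the device only quantizes the incoming input and reads the stored result. The toy `model`, the grid resolution, and the `occlusion_attribution` helper are hypothetical choices for illustration; a real deployment would trade grid size against memory and explanation fidelity.

```python
import itertools

def model(x0, x1):
    # Hypothetical toy model with an interaction term.
    return 3.0 * x0 + x0 * x1

BASELINE = (0.0, 0.0)

def occlusion_attribution(x):
    """Offline step: drop in the prediction when each feature
    is replaced by its baseline value, one feature at a time."""
    full = model(*x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = BASELINE[i]
        scores.append(full - model(*occluded))
    return tuple(scores)

STEP = 0.5                              # grid resolution (assumed)
GRID = [i * STEP for i in range(5)]     # 0.0 .. 2.0 per feature

def quantize(x):
    """Snap each feature to the nearest grid point, clamped to the grid range."""
    return tuple(min(max(round(v / STEP) * STEP, GRID[0]), GRID[-1]) for v in x)

# Built once offline and shipped to the device as a small table.
EXPLANATION_MAP = {p: occlusion_attribution(p) for p in itertools.product(GRID, repeat=2)}

def explain_fast(x):
    """Run-time step: a dictionary lookup instead of re-running the explainer."""
    return EXPLANATION_MAP[quantize(x)]

print(explain_fast((1.1, 1.9)))
```

The lookup returns the explanation for the nearest grid point, not for the exact input, so the grid spacing directly bounds how approximate the real-time explanation can be.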

The ability to provide real-time explanations at the edge significantly enhances trust and debugging capabilities in critical scenarios. For instance, an autonomous vehicle’s AI could explain its braking decision in milliseconds, or a smart factory robot could justify rejecting a component, allowing human operators to intervene effectively and safely. This level of transparency is transformative for the adoption of edge AI in regulated U.S. industries.

These innovations in real-time explainability are paving the way for a new era of transparent and accountable edge AI, ensuring that even the most distributed and responsive AI systems can be understood and trusted by users and regulators alike.

User-Centric XAI: Tailoring Explanations to Stakeholders

The third significant development in U.S. explainable AI research during Q1 2025 is user-centric XAI, which tailors explanations to the needs and expertise of different stakeholders. Technical explanations are crucial for AI developers, but they are often incomprehensible to end-users, business leaders, or regulators. New frameworks are emerging that dynamically adapt the form and content of explanations, making AI transparency accessible to a broader audience.

This research acknowledges that a one-size-fits-all approach to explainability is ineffective. Instead, it leverages AI itself to generate explanations that are contextually relevant and presented in a language and format appropriate for the intended recipient. This could mean visual explanations for a non-technical user, detailed causal graphs for a data scientist, or high-level summaries for a CEO. This personalized approach to transparency could make AI adoption and governance considerably easier.

Dynamic Explanation Generation

Researchers are developing systems that incorporate user profiles and contextual information to select the most appropriate explanation strategy. This might involve natural language generation (NLG) to translate complex model logic into plain English, or interactive visualization tools that allow users to explore different facets of a decision at their own pace. The goal is to make AI systems not just explainable, but actively communicative and understandable.

- Persona-Based Explanations: Customizing explanation depth and format based on user roles (e.g., developer, clinician, manager).

- Interactive Explanation Interfaces: Tools allowing users to query ‘what-if’ scenarios and receive tailored responses from the AI.

- Multi-Modal Explanations: Combining text, visuals, and even audio to convey complex AI reasoning effectively.
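A persona-based layer can sit on top of any attribution method. The sketch below assumes attributions already exist as a feature-to-score dictionary and renders them differently for three hypothetical roles: a developer gets the full signed list, a clinician gets the top factors described by direction, and a manager gets a one-line summary. The role names, the `top_k` cut-off, and the fixed wording templates are illustrative assumptions; the systems described above would typically use richer natural language generation.

```python
def explain_for(persona, prediction, attributions, top_k=2):
    """Render the same attributions at a depth suited to the given persona."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if persona == "developer":
        # Full, signed detail for debugging.
        lines = [f"prediction={prediction:.3f}"]
        lines += [f"{name}: {score:+.3f}" for name, score in ranked]
        return "\n".join(lines)
    if persona == "clinician":
        # Top factors only, described by direction rather than raw numbers.
        parts = [
            f"{name.replace('_', ' ')} {'raised' if score > 0 else 'lowered'} the risk estimate"
            for name, score in ranked[:top_k]
        ]
        return f"Estimated risk {prediction:.0%}. Main factors: " + "; ".join(parts) + "."
    if persona == "manager":
        # One-line summary naming the dominant factor.
        top_name = ranked[0][0].replace("_", " ")
        return f"Risk {prediction:.0%}, driven mainly by {top_name}."
    raise ValueError(f"unknown persona: {persona!r}")

# Hypothetical attributions for a single diagnostic prediction.
attributions = {"blood_pressure": 0.12, "age": 0.05, "cholesterol": -0.02}
for role in ("developer", "clinician", "manager"):
    print(f"[{role}]\n{explain_for(role, 0.34, attributions)}\n")
```

Keeping the attribution step separate from the rendering step means the explanation content stays identical across audiences; only depth and wording change.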

The impact of user-centric XAI is profound for fostering trust and compliance. For instance, in healthcare, a clinician might receive a concise explanation of an AI’s diagnostic recommendation that can be relayed to the patient in plain terms, while a hospital administrator could see an aggregate report on the AI’s performance and fairness metrics. This tailored transparency ensures that every stakeholder can engage with AI on their own terms, leading to more informed decision-making and ethical deployment.

By shifting the focus from merely generating explanations to generating understandable and relevant explanations for diverse audiences, U.S. research is making significant strides in bridging the gap between AI’s technical complexity and its societal impact.

Regulatory Implications and Policy Response in the U.S.

These breakthroughs in explainable AI research are not occurring in a vacuum; they are deeply intertwined with the evolving regulatory landscape in the United States. As AI becomes more pervasive, government agencies and policymakers are increasingly demanding transparency and accountability from AI systems. The advancements in Q1 2025 directly address many of these growing concerns, providing practical tools for compliance and ethical governance.

For example, the push for causal explainability aligns with calls for stronger due diligence in AI development, ensuring that models are not just performing well, but are doing so for the right reasons. Real-time explainability on edge devices supports the need for immediate auditing and intervention capabilities in dynamic environments. Furthermore, user-centric XAI directly facilitates clear communication with affected individuals and oversight bodies, a cornerstone of fair and transparent AI use.

Shaping Future AI Legislation

The practical demonstration of advanced XAI capabilities from U.S. researchers provides concrete examples for policymakers to consider when drafting new AI regulations. Instead of broad, sometimes ambiguous mandates for ‘transparency,’ these breakthroughs offer specific methodologies and tools that can be incorporated into compliance frameworks.

- Evidence for Regulatory Feasibility: Demonstrating that robust explainability is achievable, even for complex models.

- Standardization Efforts: Contributing to industry-wide standards for explanation quality and comprehensibility.

- Public Trust Building: Providing mechanisms for organizations to demonstrate responsible AI deployment to the public and regulators.

The interplay between cutting-edge research and policy development is creating a virtuous cycle. Regulatory demands are spurring innovation in explainable AI, and in turn, these innovations are providing the necessary tools to implement effective and enforceable AI governance. This dynamic is particularly evident in the U.S., where various federal and state initiatives are exploring avenues for responsible AI deployment across critical sectors.

Ultimately, the progress in explainable AI research in Q1 2025 is helping to lay the groundwork for a future where AI systems are not only powerful but also understandable, trustworthy, and compliant with evolving ethical and legal standards in the United States.

Challenges and Future Directions for Explainable AI

Despite the remarkable progress in explainable AI research during Q1 2025, significant challenges remain, and new avenues for investigation are continuously emerging. The complexity of modern AI models, particularly deep learning architectures, continues to pose formidable hurdles for comprehensive explainability. Balancing the fidelity of explanations with their comprehensibility is an ongoing tightrope walk, often requiring trade-offs that researchers are actively working to optimize.

One primary challenge is the scalability of XAI methods to ever-larger and more intricate models. As models grow in size and complexity, generating meaningful and timely explanations can become computationally prohibitive. Furthermore, the inherent subjectivity in what constitutes a ‘good’ explanation means that evaluation metrics for XAI are still a topic of active debate and refinement. Different applications and different users will inevitably require different types and levels of explanation.
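The scalability problem is easy to see in exact Shapley-value attribution, the quantity that SHAP-style methods approximate. Each feature's value averages its marginal contribution over every coalition of the other features, weighted by $|S|!\,(d-|S|-1)!/d!$, so a model with $d$ features needs $2^d$ distinct evaluations of the value function. The sketch below computes exact Shapley values for a hypothetical toy value function and counts the coalitions it evaluates; `toy_value` and its weights are illustrative assumptions.

```python
import itertools
import math
from functools import lru_cache

def exact_shapley(value, d):
    """Exact Shapley values for a value function over d features.

    value: callable taking a frozenset of feature indices.
    Returns (phi, number_of_distinct_coalitions_evaluated).
    """
    cached = lru_cache(maxsize=None)(value)  # count each coalition once
    phi = [0.0] * d
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for size in range(d):
            weight = math.factorial(size) * math.factorial(d - size - 1) / math.factorial(d)
            for subset in itertools.combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (cached(s | {i}) - cached(s))
    return phi, cached.cache_info().currsize

WEIGHTS = [1.0, 2.0, 0.5, 0.0]  # hypothetical per-feature effects

def toy_value(coalition):
    # Model output when only features in `coalition` are "present",
    # plus an interaction bonus when features 0 and 1 appear together.
    base = sum(WEIGHTS[j] for j in coalition)
    bonus = 1.0 if {0, 1} <= coalition else 0.0
    return base + bonus

phi, n_evals = exact_shapley(toy_value, 4)
print("Shapley values:", [round(p, 3) for p in phi], "evaluations:", n_evals)

# Cost grows as 2**d, regardless of how simple the model is.
for d in (4, 8, 12):
    _, evals = exact_shapley(lambda s: float(len(s)), d)
    print(f"d={d}: {evals} coalitions evaluated")
```

This exponential growth is why practical tools either sample coalitions or exploit model structure (for example, algorithms specialized for linear or tree models) instead of enumerating every subset.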

Overcoming Interpretability-Performance Trade-offs

A persistent concern in XAI is the perceived trade-off between model performance and interpretability. Often, the most powerful AI models are the least transparent. Future research in the U.S. is focused on developing ‘inherently interpretable’ models that achieve high performance while being transparent by design, rather than relying solely on post-hoc explanation techniques. This involves innovative architectural choices and training methodologies.

- Hybrid XAI Approaches: Combining global and local explanation techniques for a more complete picture.

- Ethical XAI: Integrating fairness and bias detection directly into explanation generation processes.

- Standardized XAI Benchmarks: Developing rigorous benchmarks to objectively compare and validate different explanation methods.
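A common way to combine local and global views, in the spirit of the hybrid approaches listed above, is to compute a local attribution for every instance and average the absolute values into a global importance ranking, so the same numbers explain both single decisions and overall model behavior. The sketch assumes a hypothetical linear credit model whose local attributions are `w_i * (x_i - mean_i)`; the weights, feature names, and rows are illustrative, and any local method could be substituted.

```python
import statistics

WEIGHTS = {"income": 0.8, "debt_ratio": -1.2, "account_age": 0.1}  # hypothetical

def local_attributions(row, means):
    """Per-instance attribution for a linear model, relative to the feature means."""
    return {f: w * (row[f] - means[f]) for f, w in WEIGHTS.items()}

def global_importance(rows):
    """Mean absolute local attribution per feature, most important first."""
    means = {f: statistics.fmean(r[f] for r in rows) for f in WEIGHTS}
    per_row = [local_attributions(r, means) for r in rows]
    scores = {f: statistics.fmean(abs(a[f]) for a in per_row) for f in WEIGHTS}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

rows = [
    {"income": 1.0, "debt_ratio": 0.2, "account_age": 5.0},
    {"income": 0.4, "debt_ratio": 0.6, "account_age": 2.0},
    {"income": 0.7, "debt_ratio": 0.9, "account_age": 9.0},
]
for name, score in global_importance(rows):
    print(f"{name}: {score:.3f}")
```

In this toy data, `account_age` has the smallest weight but varies widely, so it outranks `income` globally; looking at weight magnitudes alone would not reveal that.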

Another critical area for future work involves the explainability of multimodal AI systems, which process and integrate information from various sources like text, images, and audio. Explaining decisions made across such diverse data types presents a unique set of challenges that require novel interdisciplinary approaches. The U.S. AI research community is actively investing in these complex areas, recognizing their importance for the next generation of AI applications.

The journey towards fully transparent and trustworthy AI is long, but the breakthroughs in Q1 2025 demonstrate a clear and accelerating trajectory. Addressing these remaining challenges will be crucial for realizing the full potential of AI responsibly and ethically in the United States and globally.

Impact on U.S. Industries and Public Trust

The advancements in explainable AI research witnessed in Q1 2025 are poised to have a transformative impact across various U.S. industries and significantly bolster public trust in AI technologies. From finance to healthcare, and from legal systems to critical infrastructure, the ability to understand and verify AI decisions is becoming a non-negotiable requirement for adoption and regulatory approval.

In the financial sector, enhanced transparency in credit scoring, fraud detection, and algorithmic trading can lead to fairer outcomes and reduce systemic risks. Healthcare benefits from AI that can explain diagnostic recommendations or treatment plans, fostering collaboration between clinicians and AI systems and improving patient safety. In legal and governmental contexts, explainable AI is essential for ensuring due process and accountability in decisions affecting citizens’ lives.

Building a Foundation of Trust

Beyond specific industry applications, the overarching impact of these XAI breakthroughs is the potential to build a stronger foundation of public trust in AI. When people understand how AI systems arrive at their conclusions, they are more likely to accept and engage with these technologies. This is particularly crucial in a democratic society like the U.S., where public acceptance is vital for widespread AI integration.

- Enhanced Regulatory Compliance: Providing tools for businesses to meet existing and future transparency regulations.

- Improved AI System Development: Enabling developers to debug, refine, and build more robust and fair AI models.

- Empowered End-Users: Giving users the confidence and understanding to interact with AI responsibly.

The ongoing commitment to explainable AI research within the U.S. is a strategic investment in the future of AI. It ensures that as AI systems become more powerful and autonomous, they remain under human oversight and control, operating in ways that are both effective and ethically sound. This commitment is crucial for maintaining the U.S.’s leadership in AI innovation while simultaneously upholding societal values.

Taken together, the Q1 2025 breakthroughs in explainable AI are not just academic achievements; they are practical advancements that will shape how AI is developed, deployed, and perceived across the United States, driving both innovation and responsible stewardship.

| Key Breakthrough | Brief Description |
|---|---|
| Causal Explainability | Moving beyond correlation to identify ‘why’ an AI decision occurred, crucial for high-stakes applications. |
| Real-time Edge XAI | Enabling immediate, resource-efficient explanations for AI models deployed on edge devices. |
| User-Centric Explanations | Tailoring AI explanations to the specific needs and comprehension levels of diverse stakeholders. |
| Regulatory Alignment | New XAI tools provide practical solutions for meeting evolving U.S. AI transparency regulations. |

Frequently Asked Questions About Explainable AI Breakthroughs

What is causal explainability in AI?

Causal explainability moves beyond correlations to identify the direct ‘why’ behind an AI model’s decision. It seeks to understand the cause-and-effect relationships within the model, providing deeper insights than traditional methods that only show feature importance. This is vital for high-stakes applications.

Why is real-time explainability important for edge AI?

Real-time explainability for edge AI allows immediate understanding of decisions made on devices with limited resources. This is crucial for applications like autonomous vehicles or smart manufacturing, where instantaneous explanations are needed for safety, debugging, and timely human intervention.

What are user-centric explanations?

User-centric explanations tailor the form and content of AI explanations to the specific needs and technical understanding of different stakeholders. This personalized approach makes AI more accessible and understandable to a wider audience, fostering trust and enabling informed decision-making across various user groups.

How do these breakthroughs relate to U.S. AI regulation?

These breakthroughs provide practical tools for meeting evolving U.S. AI transparency and accountability regulations. They offer concrete methodologies that policymakers can integrate into future compliance frameworks, making it more feasible for industries to adopt AI responsibly while adhering to ethical and legal standards.

Do challenges remain in explainable AI research?

Yes, significant challenges remain, including scaling XAI methods to increasingly complex models, developing robust evaluation metrics for explanation quality, and overcoming the interpretability-performance trade-off. Research continues to seek inherently interpretable models and effective explanations for multimodal AI systems.

Conclusion

The first quarter of 2025 has marked a pivotal period for explainable AI research in the United States, ushering in groundbreaking advancements in causal explainability, real-time edge explainability, and user-centric explanation tailoring. These innovations are fundamentally reshaping how AI models are understood, trusted, and governed, moving beyond mere performance metrics to embrace transparency and accountability as core tenets of AI development. As these breakthroughs mature, they will not only enhance regulatory compliance and debugging capabilities but also significantly strengthen public confidence in AI across critical U.S. industries. The commitment to making AI more understandable is a crucial step towards realizing its full potential responsibly and ethically, ensuring that powerful AI systems remain aligned with human values and oversight.