Quantum AI: 15% Faster Model Training in U.S. Labs by 2025

U.S. AI labs are projected to achieve a significant 15% acceleration in AI model training times by 2025, primarily through the strategic integration of quantum computing technologies, marking a pivotal advancement in artificial intelligence research and development.

The landscape of artificial intelligence is undergoing a profound transformation, particularly in how models are developed and refined. In the United States, leading AI laboratories are projected to train models 15% faster in 2025 by integrating new quantum computing capabilities into their workflows. If realized, this acceleration would be more than an incremental improvement: it would expand our ability to tackle increasingly complex AI challenges and push the boundaries of what is computationally possible.

The Quantum Leap: Understanding Quantum Computing’s Role in AI

Quantum computing is a fundamentally different approach to computation that uses principles of quantum mechanics to process information. Classical computers store data as bits (0 or 1). Quantum computers instead use qubits, which can exist in a superposition of 0 and 1 and can be entangled with one another so that their states are correlated. For certain problems, algorithms that exploit these effects are expected to run much faster than the best known classical methods (exponentially faster in a few cases, quadratically in others), which makes quantum hardware a promising candidate for accelerating compute-heavy AI tasks such as model training.

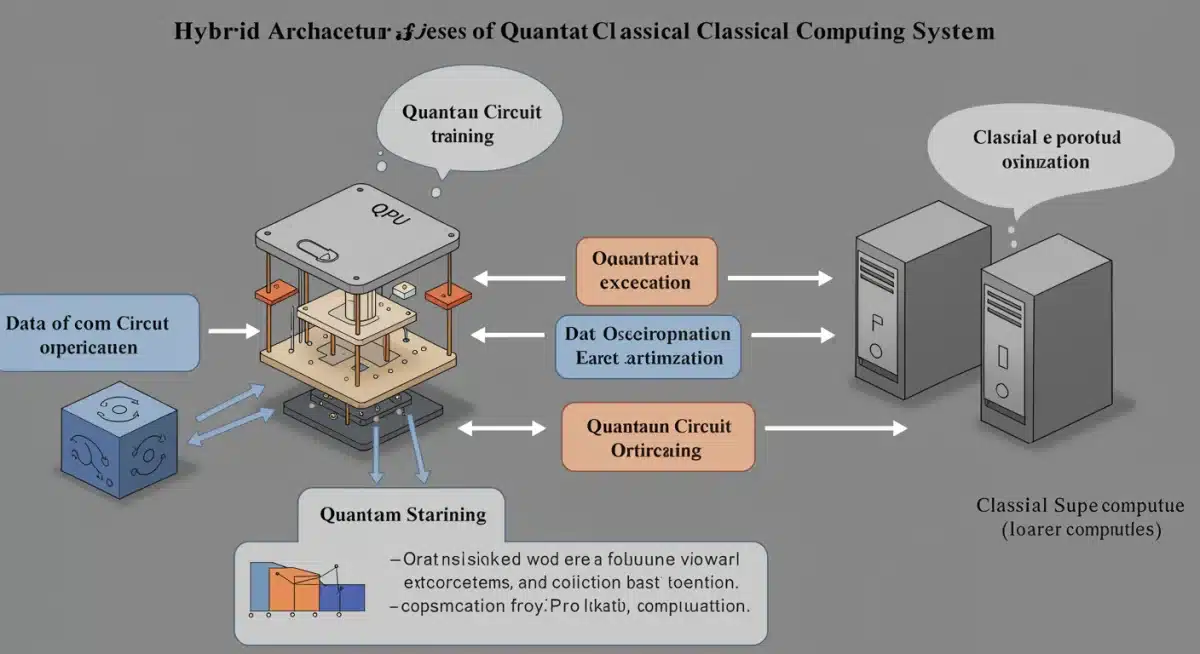

The integration of quantum computing into AI is not about replacing classical systems entirely, but about creating powerful hybrid architectures. These systems combine the strengths of both, offloading computationally intensive subroutines to quantum processors while classical computers handle overall control and data management. This hybrid approach is widely regarded as the most practical path to faster AI development on current hardware.
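Because only some subroutines move to the quantum processor, the end-to-end gain is bounded by Amdahl's law. The sketch below is a back-of-the-envelope calculation, not a model of any lab's actual pipeline: the 10x subroutine speedup is a hypothetical figure, the function names are illustrative, and encoding, queueing, and measurement overheads are ignored. It also shows that "15% faster" can mean either 1.15x throughput or 15% less wall-clock time, which are slightly different targets.

```python
def overall_speedup(offload_fraction: float, subroutine_speedup: float) -> float:
    """Amdahl's law: end-to-end speedup when only part of the runtime is accelerated."""
    if not 0.0 <= offload_fraction <= 1.0:
        raise ValueError("offload_fraction must be in [0, 1]")
    if subroutine_speedup <= 0:
        raise ValueError("subroutine_speedup must be positive")
    remaining_time = (1.0 - offload_fraction) + offload_fraction / subroutine_speedup
    return 1.0 / remaining_time


def required_fraction(target_speedup: float, subroutine_speedup: float) -> float:
    """Smallest fraction of runtime that must be offloaded to reach target_speedup.

    A result above 1 means the target is unreachable with this subroutine speedup.
    """
    if subroutine_speedup <= 1.0:
        raise ValueError("offloading only helps if the subroutine is actually faster")
    # Solve 1 / ((1 - p) + p / s) = S for p:  p = (1 - 1/S) / (1 - 1/s)
    return (1.0 - 1.0 / target_speedup) / (1.0 - 1.0 / subroutine_speedup)


if __name__ == "__main__":
    # Hypothetical: a quantum subroutine that runs 10x faster than its classical version.
    targets = {"1.15x throughput": 1.15, "15% less wall-clock time": 1 / 0.85}
    for label, target in targets.items():
        p = required_fraction(target, subroutine_speedup=10.0)
        print(f"{label}: offload at least {p:.1%} of training time")
```

With these numbers the script reports about 14.5% and 16.7%. Even an infinitely fast subroutine would have to cover at least 1 - 1/1.15, about 13%, of total training time to reach the 1.15x target, before any data-transfer overhead is counted.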

Quantum Advantage in Specific AI Algorithms

- Optimization Algorithms: Quantum annealing and the Quantum Approximate Optimization Algorithm (QAOA) are being studied as potentially more efficient approaches to combinatorial problems such as hyperparameter tuning and neural network architecture search, both critical steps in model training.

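- Linear Algebra Operations: Many machine learning algorithms rely heavily on linear algebra. Quantum algorithms such as Harrow-Hassidim-Lloyd (HHL) can solve linear systems exponentially faster than classical counterparts, but only under strict conditions: the matrix must be sparse and well-conditioned, the input must be loaded efficiently, and the result is delivered as a quantum state rather than a readable vector.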

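- Sampling and Generative Models: Quantum processors have shown an advantage on specially designed sampling tasks (the so-called quantum supremacy experiments). If that sampling power can be harnessed, it could boost the performance and training of generative AI models, leading to more realistic and diverse outputs.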
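To make QAOA less abstract, the sketch below simulates a depth-1 QAOA circuit with a plain NumPy statevector and tunes its two angles by grid search, standing in for the classical outer loop a hybrid system would run. The problem is MaxCut on a hypothetical 4-node ring, a standard textbook stand-in for the combinatorial problems listed above; it is not a hyperparameter search, the helper names are illustrative, and exact statevector simulation only works for small qubit counts.

```python
import numpy as np

def cut_values(n, edges):
    """Cut size of every basis state |z>, where bit q of the integer z is node q's side."""
    values = np.zeros(2 ** n)
    for z in range(2 ** n):
        bits = [(z >> q) & 1 for q in range(n)]
        values[z] = sum(bits[i] != bits[j] for i, j in edges)
    return values

def qaoa_state(gamma, beta, n, cuts):
    """Depth-1 QAOA state U_B(beta) U_C(gamma) |+>^n, simulated exactly."""
    state = np.full(2 ** n, 2 ** (-n / 2), dtype=complex)   # uniform superposition |+>^n
    state = np.exp(-1j * gamma * cuts) * state                # cost layer is diagonal
    mixer = np.array([[np.cos(beta), -1j * np.sin(beta)],
                      [-1j * np.sin(beta), np.cos(beta)]])    # exp(-i beta X) on one qubit
    psi = state.reshape([2] * n)
    for q in range(n):                                        # apply the mixer to every qubit
        psi = np.moveaxis(np.tensordot(mixer, psi, axes=([1], [q])), 0, q)
    return psi.reshape(-1)

def expected_cut(gamma, beta, n, cuts):
    """Average cut size if the QAOA state were measured many times."""
    probs = np.abs(qaoa_state(gamma, beta, n, cuts)) ** 2
    return float(probs @ cuts)

n = 4
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]   # hypothetical 4-node ring; its maximum cut is 4
cuts = cut_values(n, edges)
grid = np.linspace(0, np.pi, 61)           # classical outer loop: brute-force angle search
best_value, best_gamma, best_beta = max(
    (expected_cut(g, b, n, cuts), g, b) for g in grid for b in grid
)
print(f"expected cut {best_value:.3f} at gamma={best_gamma:.3f}, beta={best_beta:.3f}")
```

A uniformly random assignment cuts 2 of the 4 edges on average; the tuned depth-1 circuit does noticeably better, and deeper circuits can approach the optimum of 4. Real workflows replace the grid search with a classical optimizer.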

Quantum algorithms can operate on superpositions spanning vast solution spaces and use interference to amplify good answers, which could change what is practical in AI, particularly for tasks that are intractable for even the most powerful classical supercomputers. Exploited well, this capability could make the search over model parameters and features more efficient and contribute to faster convergence during training. The U.S. is investing strategically in these capabilities to maintain its lead in the global AI race.
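It is worth being precise about what "exploring a solution space" means: a measurement returns only one outcome, so quantum algorithms rely on interference to make good answers likely. Grover's search algorithm is the textbook example, finding a marked item among N in roughly (π/4)√N oracle calls instead of about N/2 classical guesses, a quadratic rather than exponential gain. The sketch below simulates it with NumPy; the item count, the marked index, and the function names are illustrative choices.

```python
import numpy as np

def grover_success_probability(n_qubits: int, marked: int, iterations: int) -> float:
    """Probability of measuring the marked item after `iterations` Grover steps."""
    dim = 2 ** n_qubits
    state = np.full(dim, 1 / np.sqrt(dim))   # uniform superposition over all N items
    for _ in range(iterations):
        state[marked] *= -1                  # oracle: flip the sign of the marked item
        state = 2 * state.mean() - state     # diffusion: reflect every amplitude about the mean
    return float(state[marked] ** 2)

def optimal_iterations(n_qubits: int) -> int:
    """About (pi / 4) * sqrt(N) oracle calls, versus roughly N / 2 classical guesses."""
    return int(np.floor(np.pi / 4 * np.sqrt(2 ** n_qubits)))

n_qubits = 10                                # N = 1024 items (illustrative)
k = optimal_iterations(n_qubits)
print(k, round(grover_success_probability(n_qubits, marked=123, iterations=k), 4))
```

For 1,024 items this takes 25 iterations to reach a success probability above 0.99, where a classical search would need about 512 guesses on average. Running more iterations than the optimum actually lowers the success probability, which is one reason quantum speedups depend on careful algorithm design.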

Accelerating Neural Network Training with Quantum Processors

Neural networks, the backbone of modern AI, require immense computational resources for training, especially deep learning models with billions of parameters. Quantum computing offers promising avenues to significantly accelerate this process by enhancing various stages of neural network training, from data encoding to weight optimization.

One of the most impactful applications lies in optimization. Training a neural network involves minimizing a loss function, which is usually a non-convex optimization problem. Quantum optimization algorithms may navigate these complex landscapes more effectively (quantum annealing, for example, can tunnel through energy barriers rather than climbing over them), potentially finding good minima faster and escaping local optima that trap classical algorithms. If these advantages hold at scale, the result would be more robust, higher-performing models trained in less time.

Quantum-Enhanced Backpropagation and Gradient Descent

Traditional backpropagation, the workhorse of neural network training, is a classical algorithm that computes gradients to update weights. Researchers are exploring quantum analogues and hybrid approaches in which quantum processors assist in calculating these gradients. For instance, quantum circuits have been proposed for certain matrix multiplications and eigenvalue problems that are integral to gradient computations, although any speedup depends on loading the data into the quantum processor efficiently.
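One concrete, widely used hybrid technique is the parameter-shift rule: for gates of the form exp(-iθP/2), where P is a Pauli operator, the exact derivative of a measured expectation value equals half the difference of two extra circuit runs at θ + π/2 and θ - π/2. The sketch below applies it to a hypothetical one-qubit circuit RX(b) RY(a)|0> simulated with NumPy, with ⟨Z⟩ playing the role of the loss. On hardware each expectation would be estimated from repeated measurements, while the classical loop would update the parameters exactly as shown.

```python
import numpy as np

def ry(theta):
    """Single-qubit rotation exp(-i theta Y / 2)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def rx(theta):
    """Single-qubit rotation exp(-i theta X / 2)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -1j * s], [-1j * s, c]], dtype=complex)

Z = np.diag([1.0, -1.0])

def expectation(params):
    """<Z> for the toy circuit RX(params[1]) RY(params[0]) |0>, simulated exactly."""
    state = rx(params[1]) @ ry(params[0]) @ np.array([1, 0], dtype=complex)
    return float(np.real(np.conj(state) @ Z @ state))

def parameter_shift_grad(f, params):
    """Gradient of f via two shifted evaluations per parameter (exact for these gates)."""
    grad = np.zeros_like(params)
    for k in range(len(params)):
        shift = np.zeros_like(params)
        shift[k] = np.pi / 2
        grad[k] = (f(params + shift) - f(params - shift)) / 2
    return grad

# Classical outer loop: plain gradient descent on the measured expectation value.
params = np.array([0.3, -0.4])
learning_rate = 0.2
for step in range(200):
    params = params - learning_rate * parameter_shift_grad(expectation, params)

print(round(expectation(params), 6))   # converges toward the minimum value -1
```

For this circuit ⟨Z⟩ = cos(a)cos(b), so the shifted evaluations reproduce the analytic gradient exactly; unlike finite differences, the rule needs no small step size, which matters when every evaluation carries measurement noise.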

Furthermore, the ability of quantum computers to handle high-dimensional data efficiently can lead to novel ways of representing and processing information within neural networks. This could pave the way for quantum neural networks (QNNs) that inherently leverage quantum phenomena for enhanced learning capabilities, beyond just accelerating classical training methods.

- Data Embedding: Quantum circuits can embed classical data into a higher-dimensional quantum feature space, potentially making patterns more discernible for learning algorithms.

- Weight Optimization: Quantum annealing and variational quantum eigensolvers (VQE) are being adapted to find optimal sets of weights for complex neural network architectures.

- Noise Resilience: Efforts are underway to develop quantum error correction techniques that will be crucial for reliable and scalable quantum AI training in the future.
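As a concrete picture of data embedding, the sketch below uses angle encoding, one common scheme among several: each feature sets the rotation angle of its own qubit, so n features become a 2^n-dimensional state. The squared overlap of two embedded states gives a "fidelity" kernel that a classical kernel method (for example scikit-learn's SVC with kernel="precomputed") could consume. The feature values and function names are hypothetical. This particular product-state encoding is easy to simulate classically, since its kernel reduces to a product of cosines, so on its own it offers no quantum advantage; it only illustrates the mechanics.

```python
from functools import reduce

import numpy as np

def angle_encode(x):
    """Map n features to a 2**n-dimensional product state, one qubit per feature.

    Feature x_j sets qubit j to RY(x_j)|0> = [cos(x_j / 2), sin(x_j / 2)].
    """
    qubits = [np.array([np.cos(xj / 2), np.sin(xj / 2)]) for xj in x]
    return reduce(np.kron, qubits)

def fidelity_kernel(x, y):
    """Kernel entry k(x, y) = |<phi(x)|phi(y)>|^2 between two embedded data points."""
    return float(abs(np.vdot(angle_encode(x), angle_encode(y))) ** 2)

# Hypothetical data set: three samples with three features each.
X = np.array([[0.1, 0.5, 1.2],
              [0.2, 0.4, 1.0],
              [2.5, 3.0, 0.3]])
K = np.array([[fidelity_kernel(a, b) for b in X] for a in X])
print(K.round(3))   # symmetric, ones on the diagonal; similar samples score near 1
```

Richer feature maps add entangling gates between qubits; those are the ones that can become hard to simulate classically, and they are where a genuine quantum benefit is being sought.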

The current focus in U.S. labs is on near-term quantum devices, often called Noisy Intermediate-Scale Quantum (NISQ) computers, which still have limitations. However, even with these constraints, researchers are demonstrating proof-of-concept accelerations that indicate the immense potential for scaling up as quantum hardware matures. This incremental progress is vital for reaching the 15% faster training goal by 2025.

Key U.S. Labs Leading the Quantum AI Integration

Several prominent U.S. research institutions and corporate labs are at the forefront of integrating quantum computing with AI. These entities are not only developing cutting-edge quantum hardware but also pioneering the algorithms and software frameworks necessary to harness quantum power for AI applications.

Academic institutions like the Massachusetts Institute of Technology (MIT), Stanford University, and the University of California, Berkeley, have dedicated quantum AI research groups. These groups are exploring theoretical foundations, developing new quantum machine learning algorithms, and collaborating with hardware providers to test their innovations on real quantum processors. Their open research often forms the bedrock for commercial applications.

Industry Innovators and Their Contributions

- IBM Quantum: A leader in quantum hardware and software, IBM provides cloud access to its quantum systems, enabling AI researchers to experiment with quantum algorithms for machine learning. Their Qiskit framework is widely used for developing quantum-classical AI applications.

- Google AI Quantum: Google reported a quantum supremacy demonstration on a random-circuit sampling task in 2019 and is actively researching how quantum processors can enhance machine learning, particularly in areas like quantum neural networks and quantum generative models.

- Microsoft Azure Quantum: Microsoft offers a comprehensive quantum ecosystem, integrating various quantum hardware providers and offering development tools. Their focus includes developing quantum-inspired algorithms that can run on classical hardware while preparing for full quantum integration.

- Amazon Braket: Amazon’s quantum computing service provides access to different quantum hardware technologies from various providers, fostering an environment for researchers to compare and develop quantum AI solutions across platforms.

Beyond these tech giants, numerous startups across the U.S. are specializing in quantum software and algorithms tailored for AI. These agile companies are often responsible for translating theoretical breakthroughs into practical tools and libraries that can be adopted by a broader AI development community. The collaborative ecosystem between academia, large corporations, and startups is crucial for the rapid advancements observed.

Technical Challenges and Solutions in Quantum-AI Integration

Despite the immense promise, integrating quantum computing into AI is fraught with technical challenges. These hurdles range from the inherent fragility of qubits to the complexities of designing efficient quantum-classical interfaces. Overcoming these obstacles is paramount to achieving the projected 15% faster training times.

One significant challenge is qubit stability and error rates. Current quantum processors are susceptible to noise, which can corrupt computations. Researchers are actively developing quantum error correction codes, though these require a large overhead of qubits. In the near term, noise mitigation techniques and robust algorithm design are critical to extract meaningful results from NISQ devices.
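Zero-noise extrapolation is one widely used mitigation technique of this kind: the same circuit is run at deliberately amplified noise levels (on hardware, for example, by gate folding, which replaces a gate G with G G† G), and the measured expectation values are extrapolated back to the zero-noise limit. The sketch below uses a hypothetical exponential-decay noise model with made-up constants purely to show the arithmetic; real devices do not follow such a clean curve, and mitigation multiplies the number of circuit runs required.

```python
import numpy as np

IDEAL = 0.8          # hypothetical noise-free expectation value
DECAY_RATE = 0.1     # hypothetical signal loss per unit of noise scale

def noisy_expectation(scale: float) -> float:
    """Toy noise model (an assumption): the signal decays exponentially with noise scale."""
    return IDEAL * np.exp(-DECAY_RATE * scale)

def zero_noise_extrapolate(scales, values, degree=2):
    """Fit a polynomial to (noise scale, value) pairs and evaluate it at scale 0."""
    coeffs = np.polyfit(scales, values, deg=degree)
    return float(np.polyval(coeffs, 0.0))

scales = np.array([1.0, 2.0, 3.0])   # 1 = native noise; 2 and 3 = amplified by folding
values = np.array([noisy_expectation(s) for s in scales])
mitigated = zero_noise_extrapolate(scales, values)
print(f"raw: {values[0]:.4f}  mitigated: {mitigated:.4f}  ideal: {IDEAL}")
```

With three scale factors and a quadratic fit, this is Richardson extrapolation; in this toy model it brings the estimate from about 0.724 to within 0.001 of the ideal 0.8. On real hardware each point also carries shot noise, which extrapolation amplifies.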

Bridging the Classical-Quantum Divide

Effective communication between classical and quantum components is another critical area. Data often needs to be translated from classical representations to quantum states (encoding) and vice-versa (measurement). This process needs to be efficient to avoid bottlenecks that could negate the quantum speedup. New quantum compilers and programming interfaces are being developed to streamline this interaction.
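Measurement is a bottleneck partly because a quantum computer returns samples, not numbers: an expectation value must be estimated from many repeated runs ("shots"), and the statistical error shrinks only as 1/√shots. The sketch below simulates single-qubit ⟨Z⟩ readout with NumPy's random generator; the true value of 0.3 and the helper names are hypothetical.

```python
import numpy as np

def estimate_z(p_zero: float, shots: int, rng: np.random.Generator) -> float:
    """Estimate <Z> = P(0) - P(1) from a finite number of simulated single-qubit shots."""
    read_zero = rng.random(shots) < p_zero     # True where the qubit was measured as 0
    return 2.0 * read_zero.mean() - 1.0

def shots_for_precision(expectation: float, target_std_error: float) -> int:
    """Shots needed for the standard error of the <Z> estimate to reach the target."""
    variance = 1.0 - expectation ** 2          # variance of a single +1/-1 outcome
    return int(np.ceil(variance / target_std_error ** 2))

rng = np.random.default_rng(seed=0)
true_z = 0.3                                   # hypothetical exact expectation value
p_zero = (1 + true_z) / 2
for shots in (100, 10_000, 1_000_000):
    print(shots, round(estimate_z(p_zero, shots, rng), 4))   # error shrinks like 1/sqrt(shots)
print(shots_for_precision(true_z, 1e-3))       # roughly 910,000 shots for 3 decimals
```

Each additional decimal digit of precision costs roughly 100 times more shots, and a training loop pays that cost for every expectation value at every step, which is why hybrid algorithms try to minimize the number of quantum evaluations.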

- Quantum Volume: A metric indicating the overall performance of a quantum computer, including qubit count, connectivity, and error rates. Increasing quantum volume is a key hardware development goal.

- Hybrid Algorithms: Designing algorithms that strategically partition tasks between classical and quantum processors to maximize efficiency and leverage each system’s strengths.

- Software Tooling: Developing user-friendly software development kits (SDKs) and frameworks that abstract away much of the quantum hardware complexity, making it accessible to AI researchers.
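To make the quantum volume metric less abstract: in the protocol introduced by IBM, a device runs random "square" circuits whose width and depth both equal n, and an output bitstring is "heavy" if its ideal probability, computed classically, lies above the median. The device passes at size n if heavy outputs make up more than two-thirds of its measurements, and the quantum volume is 2^n for the largest passing n. The sketch below implements only the heavy-output check; the exponential weights are a stand-in for a real random circuit's output distribution, the function names are illustrative, and the real protocol averages over many circuits and requires a statistical confidence margin.

```python
import numpy as np

def heavy_outputs(ideal_probs: np.ndarray) -> np.ndarray:
    """Bitstrings (as integers) whose ideal probability is above the median."""
    return np.flatnonzero(ideal_probs > np.median(ideal_probs))

def heavy_output_fraction(ideal_probs: np.ndarray, samples: np.ndarray) -> float:
    """Fraction of measured samples that fall in the heavy set."""
    return float(np.isin(samples, heavy_outputs(ideal_probs)).mean())

rng = np.random.default_rng(seed=7)
n = 5                                          # circuit width = depth = n in the QV protocol
weights = rng.exponential(size=2 ** n)         # stand-in for a random circuit's output distribution
ideal = weights / weights.sum()

perfect_device = rng.choice(2 ** n, size=5000, p=ideal)   # samples the ideal distribution
noisy_device = rng.integers(0, 2 ** n, size=5000)         # fully depolarized: uniform noise

for name, samples in [("perfect", perfect_device), ("depolarized", noisy_device)]:
    fraction = heavy_output_fraction(ideal, samples)
    verdict = "passes" if fraction > 2 / 3 else "fails"
    print(f"{name}: heavy-output fraction {fraction:.3f}, {verdict} at n={n}")
```

A noiseless device lands in the heavy set well above two-thirds of the time, while a fully depolarized one hits it only about half the time, so the test directly penalizes the error rates and poor connectivity that scramble a circuit's output.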

Furthermore, the sheer scale of modern AI datasets poses a challenge. Loading massive datasets into quantum memory is not yet feasible. Therefore, current approaches focus on using quantum processors for specific, data-intensive sub-problems or for processing compressed representations of data. Innovations in quantum data compression and efficient data loading mechanisms are active areas of research that will unlock greater potential.
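Amplitude encoding shows both the appeal and the catch. A vector of N values can be stored in the amplitudes of only ceil(log2 N) qubits once it is padded and normalized, but preparing an arbitrary such state generally requires a circuit whose size grows with N, and reading the amplitudes back out requires many measurements. The helper below (a hypothetical name) performs only the classical preprocessing step; it does not build a state-preparation circuit.

```python
import numpy as np

def amplitude_encode(x):
    """Pad x to a power-of-two length and L2-normalize it into valid state amplitudes.

    Returns (amplitudes, n_qubits). Only classical preprocessing: no circuit is built.
    """
    x = np.asarray(x, dtype=float)
    if x.size == 0:
        raise ValueError("cannot encode an empty vector")
    n_qubits = max(1, int(np.ceil(np.log2(x.size))))
    padded = np.zeros(2 ** n_qubits)
    padded[: x.size] = x
    norm = np.linalg.norm(padded)
    if norm == 0:
        raise ValueError("the zero vector cannot be encoded as a quantum state")
    return padded / norm, n_qubits

amplitudes, n_qubits = amplitude_encode([3.0, 4.0, 0.0])
print(n_qubits, amplitudes)                     # 2 qubits; amplitudes 0.6, 0.8, 0, 0
print(amplitude_encode(np.ones(1_000_000))[1])  # a million features fit in 20 qubits
```

The exponential compression in qubit count is real, but it does not remove the cost of getting the data in and out, which is why current approaches favor compressed representations and carefully chosen subproblems.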

Impact on AI Research and Development Ecosystem

The integration of quantum computing is poised to profoundly impact the entire AI research and development ecosystem in the U.S. Faster model training times translate into accelerated iteration cycles, enabling researchers to test more hypotheses, explore novel architectures, and develop more sophisticated AI models in a fraction of the time currently required.

This speedup could also democratize access to advanced AI capabilities. Smaller research teams and startups that are currently limited by computational resources could use quantum-accelerated platforms to compete with larger, well-funded organizations. The result would be an environment of rapid innovation, in which new ideas are prototyped and validated much more quickly.

Emerging Opportunities and Economic Implications

The economic implications are vast. Industries ranging from finance and healthcare to manufacturing and logistics stand to benefit from more powerful and efficiently trained AI systems. For instance, in drug discovery, quantum-enhanced AI could accelerate the simulation of molecular interactions, leading to faster identification of potential new treatments.

- New AI Applications: Quantum AI could enable the development of AI models for problems currently considered intractable, opening up entirely new application domains.

- Talent Demand: There will be an increased demand for professionals skilled in both quantum computing and artificial intelligence, fostering new educational and career pathways.

- Competitive Advantage: Nations and companies that successfully integrate quantum computing into their AI strategies will gain a significant technological and competitive edge.

Moreover, training AI models faster and more efficiently could make AI more energy-sustainable. The enormous energy consumption of large-scale model training is a growing concern. By offering computational shortcuts for specific subroutines, quantum computing could reduce the energy footprint of advanced AI development, in line with broader environmental sustainability goals.

The Road Ahead: Future Prospects and Ethical Considerations

Looking beyond 2025, the trajectory of quantum-AI integration promises even more transformative advancements. As quantum hardware continues to improve in terms of qubit count, coherence times, and error rates, the scope of problems that quantum computers can tackle for AI will expand dramatically.

The development of fault-tolerant quantum computers, though still a long-term goal, will unlock the full potential of quantum algorithms for AI, enabling truly revolutionary capabilities. This future will likely see quantum processors as indispensable components in the AI development pipeline, working in concert with classical supercomputers to solve humanity’s most complex challenges.

Ethical and Societal Implications

However, with such powerful technology comes significant ethical and societal responsibilities. The ability to train highly complex AI models faster raises questions about bias, fairness, and accountability. Ensuring that quantum-enhanced AI systems are developed and deployed ethically will be paramount.

- Algorithmic Transparency: Developing methods to understand and interpret decisions made by complex quantum-enhanced AI models will be crucial for trust and accountability.

- Bias Mitigation: Quantum algorithms must be designed to avoid amplifying existing biases in data, ensuring equitable outcomes.

- Security Concerns: The power of quantum computing also presents new cybersecurity challenges, particularly in cryptography, necessitating the development of quantum-safe security protocols.

The collaborative efforts across government, academia, and industry will be essential to navigate these complexities. Establishing robust ethical guidelines, fostering public discourse, and investing in interdisciplinary research will ensure that the benefits of quantum-accelerated AI are harnessed responsibly for the betterment of society. The journey to 15% faster model training in U.S. labs by 2025 is just the beginning of a fascinating new chapter in AI.

| Key Aspect | Brief Description |
|---|---|
| Quantum-Classical Hybrid | Combines quantum processors for specific subroutines with classical computers for overall control and data management during AI model training. |
| Accelerated Optimization | Quantum algorithms such as annealing and QAOA are being explored to speed up hyperparameter tuning and neural network weight optimization. |
| Leading U.S. Labs | IBM Quantum, Google AI Quantum, Microsoft Azure Quantum, and top universities are driving integration and research efforts. |
| Key Challenges | Qubit instability, high error rates, and inefficient classical-quantum data transfer must be overcome before widespread adoption. |

Frequently Asked Questions About Quantum AI Training

How does quantum computing speed up AI model training?

Quantum computing’s main potential contribution is performing certain calculations, especially in optimization and linear algebra, much faster than classical computers (exponentially faster only for a few specific problem classes). Applied to the computationally intensive steps of AI model training, such as hyperparameter tuning and weight optimization, this could lead to quicker convergence and more efficient model development.

Will quantum computers replace classical computers for AI training?

No, quantum computers are not expected to entirely replace classical computers for AI training. Instead, the future lies in hybrid quantum-classical systems. Classical computers will handle data management and overall control, while quantum processors will be used for specific, highly complex subroutines where they offer a distinct speed advantage.

Which AI models stand to benefit most from quantum acceleration?

Deep learning models with many parameters, and models that rely heavily on hard optimization problems, such as generative adversarial networks (GANs) and large transformer models, stand to benefit the most. Quantum algorithms may accelerate their training by finding good solutions faster.

What are the main challenges in using quantum computing for AI training?

Major challenges include the noise and instability of the qubits in current quantum processors, the difficulty of scaling up quantum hardware, and the complexity of efficiently transferring data between classical and quantum systems. Developing robust error correction and hybrid algorithms is crucial for overcoming these hurdles.

How will faster AI training affect research and the economy?

Faster AI training would shorten innovation cycles, enabling researchers to develop and test more advanced models rapidly. It could democratize access to powerful AI, foster new applications in industries such as healthcare and finance, and increase demand for specialized quantum AI talent, driving economic growth and technological advancement.

Conclusion

The ambitious goal of U.S. AI labs achieving 15% faster model training by 2025 through quantum computing integration is more than a theoretical aspiration: it is a concrete objective backed by sustained research. This shift, rooted in hybrid quantum-classical architectures, could reshape how artificial intelligence models are developed, refined, and deployed. While significant technical challenges remain, the concerted efforts of leading research institutions and industry players are steadily paving the way for a new era of AI characterized by greater speed, efficiency, and capability. The implications extend beyond computational gains to new applications, economic growth, and a changed technological landscape, all of which demand careful attention to the ethical frameworks governing this powerful new frontier.