Federated Learning vs. Decentralized AI: 2026 Secure Model Training

Federated Learning and Decentralized AI represent two pivotal paradigms for secure model training in 2026, distinctly addressing data privacy and collaborative intelligence while offering unique architectural and operational advantages.

As we navigate 2026, the landscape of artificial intelligence continues to be reshaped by innovations focused on data privacy and security. Among these, federated learning and decentralized AI have emerged as critical pillars for the next generation of secure model training. Understanding their nuances is not just academic; it’s essential for anyone looking to harness AI’s power responsibly and effectively in an increasingly data-sensitive world.

Understanding Federated Learning: A Collaborative Privacy Paradigm

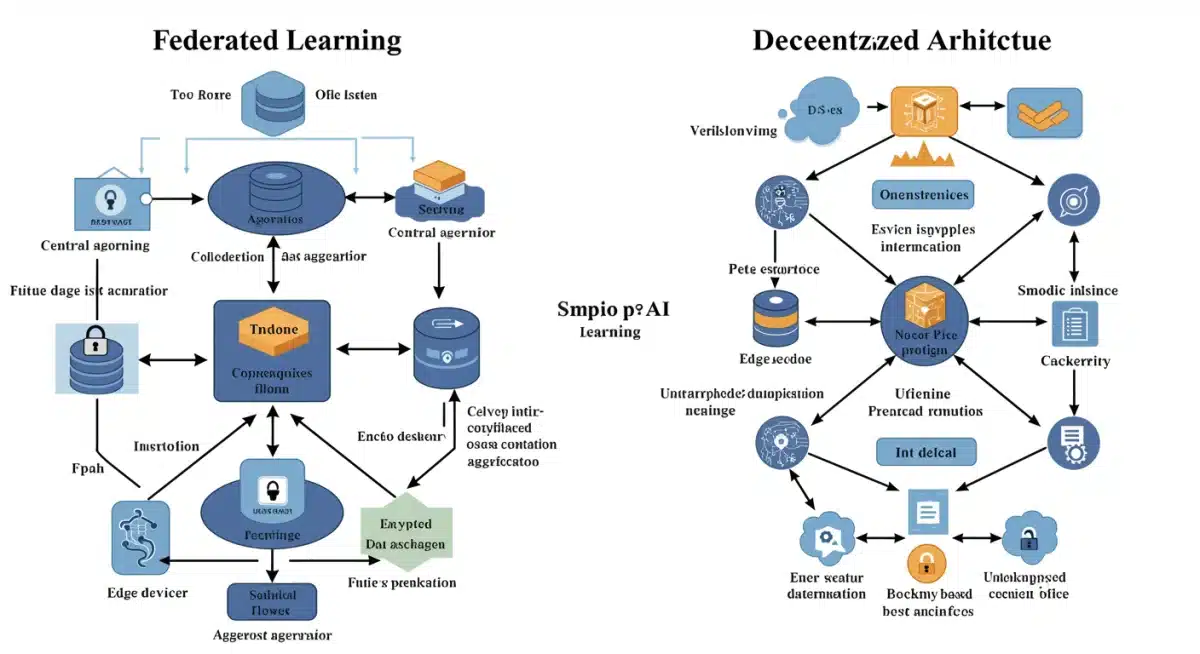

Federated Learning stands as a groundbreaking approach that enables multiple entities to collaboratively train a shared machine learning model without directly exchanging their raw data. This method fundamentally shifts the paradigm of data privacy in AI. Instead of centralizing sensitive information, federated learning distributes the training process, allowing local models to learn from local datasets.

The core principle involves a central server orchestrating the learning process. Each participant downloads the current global model, trains it on their private data, and then sends only the updated model parameters (not the data itself) back to the server. The server then aggregates these updates to create a new, improved global model, which is then redistributed. This iterative process ensures that sensitive information remains on the local devices or servers, significantly enhancing privacy.
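The round described above can be sketched in a few lines. This is a minimal illustration in the style of federated averaging (FedAvg), assuming a toy linear model `y = w0 + w1 * x` trained by plain gradient descent. The function names, learning rate, epoch count and client data are hypothetical and chosen only for the demo. The server only ever handles weight vectors, and it weights each client's contribution by its number of local examples.

```python
import random

def local_train(weights, data, lr=0.5, epochs=10):
    """Full-batch gradient descent for the linear model y = w0 + w1 * x,
    run on one client's private (x, y) pairs. Only the weights are returned."""
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in data:
            err = (w0 + w1 * x) - y
            g0 += err
            g1 += err * x
        w0 -= lr * g0 / n
        w1 -= lr * g1 / n
    return [w0, w1]

def fedavg_round(global_weights, client_datasets):
    """One round: every client trains a copy of the global model locally, and the
    server averages the returned weights, weighting each client by its example count."""
    total = sum(len(d) for d in client_datasets)
    new_weights = [0.0] * len(global_weights)
    for data in client_datasets:
        local = local_train(list(global_weights), data)  # raw data stays inside this call
        share = len(data) / total
        for i, w in enumerate(local):
            new_weights[i] += share * w
    return new_weights

# Hypothetical setup: three clients, each holding 20 private points from y = 2x + 1.
rng = random.Random(0)
clients = [[(x, 2 * x + 1) for x in (rng.random() for _ in range(20))] for _ in range(3)]
weights = [0.0, 0.0]
for _ in range(50):
    weights = fedavg_round(weights, clients)
print([round(w, 3) for w in weights])  # should approach [1.0, 2.0]
```

Real deployments usually sample only a subset of clients per round and run stochastic rather than full-batch updates; the sketch keeps every client in every round for clarity.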

Key Components of Federated Learning

- Central Aggregator: Coordinates the training process, distributes global models, and aggregates local updates.

- Local Clients: Devices or organizations holding private datasets, responsible for local model training.

- Model Updates: Only model parameters, not raw data, are shared with the central server.

- Iterative Process: Continuous cycles of model distribution, local training, and aggregation.

The benefits of federated learning are profound, particularly in sectors like healthcare, finance, and mobile computing, where data privacy regulations are stringent. It allows for the development of robust AI models that leverage vast amounts of distributed data while helping organizations meet privacy mandates such as GDPR and HIPAA. However, challenges persist, including communication overhead, the potential for data poisoning attacks, and ensuring fairness across diverse client datasets. The evolution of federated learning in 2026 is focused on mitigating these issues through advanced encryption techniques and more robust aggregation algorithms.

In essence, federated learning offers a powerful framework for collaborative AI development where data privacy is paramount, demonstrating its increasing relevance in today’s interconnected digital ecosystem.

Decentralized AI: The Autonomous and Distributed Approach

Decentralized AI, while also focused on distributed intelligence, operates on a fundamentally different architectural principle compared to federated learning. Instead of relying on a central orchestrator, decentralized AI systems distribute both data and computational tasks across a network of independent nodes, often leveraging blockchain or peer-to-peer technologies. This approach fosters true autonomy and can significantly enhance resilience and security by eliminating single points of failure.

In a decentralized AI system, individual nodes or agents collaborate to achieve a common goal without a hierarchical structure. Each participant might contribute computational resources, data, or even specific model components. The coordination among these nodes often occurs through consensus mechanisms, similar to those found in blockchain networks, ensuring data integrity and agreement on shared states or model parameters.
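To make "collaboration without a hierarchical structure" concrete, here is a minimal sketch of randomized pairwise gossip averaging, a peer-to-peer technique often used in decentralized learning. It is swapped in for illustration and is not a blockchain consensus protocol. The function name, ring topology and starting weights are hypothetical. Each exchange preserves the sum of all parameters, so every peer converges toward the network-wide average without any node acting as an aggregator.

```python
import random

def gossip_average(node_params, neighbors, steps=500, seed=0):
    """Randomized pairwise gossip. At each step a random node contacts a random
    neighbor and both replace their parameters with the pair's average. Each exchange
    preserves the network-wide sum, so all nodes drift toward the global mean
    without any node acting as a central aggregator."""
    rng = random.Random(seed)
    params = [list(p) for p in node_params]
    for _ in range(steps):
        i = rng.randrange(len(params))
        j = rng.choice(neighbors[i])
        avg = [(a + b) / 2 for a, b in zip(params[i], params[j])]
        params[i], params[j] = avg, list(avg)
    return params

# Hypothetical network: six peers in a ring, each holding locally trained weights.
local_models = [[float(k), 2.0 * k] for k in range(6)]
ring = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
result = gossip_average(local_models, ring)
print([[round(v, 3) for v in p] for p in result])  # every peer ends up near [2.5, 5.0]
```

If one peer goes offline, the others can keep gossiping among themselves; how quickly they agree depends on how well connected the remaining network is.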

Architectural Pillars of Decentralized AI

- Peer-to-Peer Networks: Direct communication and collaboration between nodes without a central authority.

- Blockchain Integration: Used for immutable record-keeping, secure transactions, and consensus mechanisms.

- Distributed Ledger Technology (DLT): Ensures transparency and traceability of data and model interactions.

- Autonomous Agents: Individual AI entities capable of making decisions and interacting independently.

The appeal of decentralized AI lies in its potential for enhanced security, censorship resistance, and scalability. By removing central points of control, it becomes significantly harder for malicious actors to compromise the entire system. Furthermore, the inherent redundancy in distributed networks makes them highly resilient to outages. However, decentralized AI faces its own set of hurdles, such as managing complex communication protocols, ensuring computational efficiency across diverse hardware, and preventing Sybil attacks.

By 2026, advancements in distributed ledger technologies and multi-agent systems are making decentralized AI a more viable and attractive option for applications requiring extreme security and resilience, from supply chain optimization to advanced robotic systems.

Core Differences in Architecture and Control

The architectural divergence between federated learning and decentralized AI is perhaps their most defining characteristic. Federated learning, despite distributing data processing, still operates within a centralized coordination framework. A central server dictates the training rounds, aggregates models, and manages the overall process. This central entity, while not directly accessing raw data, holds significant control over the model’s evolution and the participation of clients.

Conversely, decentralized AI fundamentally rejects this central authority. Its architecture is characterized by a flat, peer-to-peer network where each node possesses a degree of autonomy. Decision-making, data sharing, and model updates are often managed through distributed consensus mechanisms, eliminating the need for a single point of control. This distinction impacts everything from system resilience to governance models.

Centralization vs. Decentralization in Practice

Consider a practical scenario: In federated learning for medical image analysis, a hospital’s data remains local, but a central research institution aggregates the model updates. The institution maintains control over the global model’s parameters and the training schedule. If the central server goes down, the entire training process halts.

In a decentralized AI equivalent, multiple hospitals could collaborate directly, exchanging encrypted model updates or even training small, specialized models that interact autonomously. There’s no single point of failure; if one hospital node is offline, the others can continue their operations. The coordination might be managed by a blockchain, ensuring secure and verifiable interactions without a trusted intermediary.

This difference in control mechanisms leads to distinct implications for trust, scalability, and security. Federated learning relies on the trustworthiness of the central aggregator, while decentralized AI aims to minimize trust in any single entity through cryptographic proofs and distributed consensus. The choice between these architectures often hinges on the specific project requirements, regulatory environment, and the acceptable level of centralization.

Data Privacy and Security Mechanisms Compared

Both federated learning and decentralized AI prioritize data privacy and security, but they achieve these goals through different mechanisms. Federated learning’s primary privacy safeguard is keeping raw data localized: only model parameters or parameter updates, never the underlying records, leave the local environment. This approach significantly reduces the risk of data breaches associated with centralized data storage.

However, federated learning is not entirely immune to privacy concerns. Advanced adversarial attacks can potentially infer sensitive information from model updates, even if direct data access is prevented. To counter this, techniques like differential privacy and secure multi-party computation (SMC) are often integrated into federated learning frameworks. Differential privacy clips each update and adds calibrated random noise, limiting how much any single data point can influence, and therefore be reconstructed from, what is shared. SMC lets participants jointly compute a function of their inputs, such as the sum of their updates, without revealing the individual inputs to one another or to the server.
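The two safeguards can be combined in one round, as sketched below under several simplifying assumptions. The differential-privacy step is a client-side Gaussian mechanism (clip, then add noise); no privacy budget (ε) is computed here. The SMC step uses pairwise additive masks in the style of secure-aggregation protocols: the masks cancel in the sum, so the server learns the aggregate but not any single update. Real protocols derive each pair's mask from a key agreement, use modular integer arithmetic and handle client dropouts. Here a single seeded RNG and floating-point masks stand in for all of that, and every name and value is hypothetical.

```python
import math
import random

def clip_and_noise(update, clip_norm, noise_multiplier, rng):
    """Gaussian mechanism applied on the client: scale the update down to L2 norm
    at most clip_norm, then add independent N(0, (noise_multiplier * clip_norm)^2)
    noise to every coordinate."""
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    sigma = noise_multiplier * clip_norm
    return [u * factor + rng.gauss(0.0, sigma) for u in update]

def pairwise_masks(num_clients, dim, seed):
    """Additive masks in the style of secure aggregation: for every pair i < j,
    client i adds a random vector r_ij and client j subtracts it, so the masks
    cancel in the sum. Simplification: one shared RNG stands in for the
    per-pair secrets that real protocols derive via key agreement."""
    rng = random.Random(seed)
    masks = [[0.0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            r = [rng.uniform(-1000.0, 1000.0) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] += r[k]
                masks[j][k] -= r[k]
    return masks

# Hypothetical round with three clients and two-parameter updates.
rng = random.Random(1)
updates = [[0.5, -1.0], [2.0, 0.0], [-0.5, 3.0]]
noisy = [clip_and_noise(u, clip_norm=1.0, noise_multiplier=0.1, rng=rng) for u in updates]
masks = pairwise_masks(len(noisy), dim=2, seed=42)
masked = [[v + m for v, m in zip(n, mk)] for n, mk in zip(noisy, masks)]

# The server only ever receives `masked`; summing it recovers the sum of noisy updates.
aggregate = [sum(col) for col in zip(*masked)]
expected = [sum(col) for col in zip(*noisy)]
print(all(abs(a - e) < 1e-6 for a, e in zip(aggregate, expected)))  # True
```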

Decentralized AI’s Robust Security Layers

Decentralized AI, particularly when built on blockchain, offers robust security features by design. Distributed ledgers are tamper-evident: each block commits to the hash of its predecessor, so once data or model updates are recorded, altering one entry would require rewriting every later block and overriding the network’s consensus. This provides a high degree of integrity and auditability. Cryptographic hashing and digital signatures ensure the authenticity of participants and the validity of transactions. Furthermore, because the network is distributed, there is no single repository an attacker can breach to exfiltrate all of the data.
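The tamper-evidence that comes from cryptographic hashing can be shown with a toy hash chain. This is a sketch under stated assumptions, not a blockchain. It has no consensus, no networking and no digital signatures (signatures would need a separate cryptography library). The ledger stores SHA-256 digests of model updates rather than the weights themselves. The record fields and node names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64

def make_block(prev_hash, record):
    """Create a ledger entry whose hash commits to both its record and its predecessor."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return {"prev": prev_hash, "record": record,
            "hash": hashlib.sha256(payload.encode()).hexdigest()}

def verify_chain(chain):
    """Recompute every hash; editing any earlier record breaks the link it belongs to."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block["hash"] != make_block(prev, block["record"])["hash"]:
            return False
        prev = block["hash"]
    return True

def digest(update):
    """Fingerprint a model update so the ledger stores a commitment, not the weights."""
    return hashlib.sha256(json.dumps(update).encode()).hexdigest()

def build_chain(entries):
    chain, prev = [], GENESIS
    for rnd, (node, update) in enumerate(entries, start=1):
        block = make_block(prev, {"node": node, "round": rnd, "update_sha256": digest(update)})
        chain.append(block)
        prev = block["hash"]
    return chain

chain = build_chain([("hospital-a", [0.1, 0.2]), ("hospital-b", [0.3, -0.1])])
print(verify_chain(chain))              # True
chain[0]["record"]["node"] = "mallory"  # tamper with history
print(verify_chain(chain))              # False
```

The same chain doubles as a provenance record: anyone holding a model update can hash it and check that its digest appears in the expected round.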

For instance, in a decentralized AI system, the provenance of training data or the lineage of a model’s development can be transparently tracked on a blockchain, building trust among participants. While federated learning focuses on preventing data from leaving its source, decentralized AI focuses on securing the entire computational and data-sharing process through cryptographic guarantees and distributed consensus. Both approaches are vital for secure model training in 2026, with their applicability often depending on the specific threat model and regulatory landscape.

Scalability and Performance Considerations in 2026

Scalability and performance are crucial factors influencing the adoption of both federated learning and decentralized AI. Federated learning, with its central aggregator, can face bottlenecks as the number of participating clients grows. The central server must handle the communication and aggregation of updates from potentially millions of devices. This can lead to significant communication overhead and computational demands on the aggregator.

Optimizing communication efficiency is a major research area in federated learning for 2026. Techniques such as sparsification, quantization, and compression of model updates are being developed to reduce bandwidth requirements. Additionally, hierarchical federated learning, where multiple aggregators manage subsets of clients, is gaining traction to improve scalability. Despite these advancements, the inherent centralized aggregation step remains a potential performance constraint.
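Two of the techniques named above, sparsification and quantization, can be sketched together: keep only the k largest-magnitude entries of an update, then encode the surviving values as 8-bit integers with a single scale factor. The update size, k, and the byte accounting (float32 dense values and scale, int32 indices, int8 values) are assumptions made for the illustration. Practical systems typically also accumulate the dropped residual locally ("error feedback") so that small coordinates are not lost forever.

```python
import random

def top_k_sparsify(update, k):
    """Keep only the k largest-magnitude entries, returned as (index, value) pairs."""
    keep = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)[:k]
    return [(i, update[i]) for i in sorted(keep)]

def quantize_int8(values):
    """Symmetric uniform 8-bit quantization: one float scale plus an integer in [-127, 127] per value."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return scale, [round(v / scale) for v in values]

def dequantize_int8(scale, q):
    return [scale * x for x in q]

# Hypothetical 1,000-parameter update from one client.
rng = random.Random(0)
update = [rng.gauss(0.0, 1.0) for _ in range(1000)]
pairs = top_k_sparsify(update, k=10)
scale, q = quantize_int8([v for _, v in pairs])
restored = dequantize_int8(scale, q)

# Byte counts assume float32 for dense values and the scale, int32 indices, int8 values.
dense_bytes = 4 * len(update)
compressed_bytes = len(pairs) * (4 + 1) + 4
print(dense_bytes, compressed_bytes)  # 4000 54
```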

Decentralized AI’s Scalability Challenges and Solutions

Decentralized AI, while theoretically highly scalable due to its distributed nature, faces its own set of performance challenges. The consensus mechanisms used in blockchain-based decentralized AI impose real costs: Proof of Work is deliberately computationally intensive, and even less energy-hungry schemes such as Proof of Stake add coordination rounds that limit transaction throughput and increase overall system latency. This is a well-known hurdle for many blockchain applications.
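Why Proof of Work is costly can be seen in a toy version of it: search for a nonce whose SHA-256 digest starts with a given number of hex zeros. This is a simplified illustration of the hash-puzzle idea only. Real networks compare against a numeric target and adjust difficulty dynamically, and Proof of Stake does not work this way at all. The block string is a hypothetical placeholder.

```python
import hashlib

def proof_of_work(block_data, difficulty):
    """Find the smallest nonce such that SHA-256(block_data + nonce) begins with
    `difficulty` hex zeros. Each extra zero multiplies the expected number of
    hash evaluations by 16, while checking a proposed nonce costs a single hash."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target):
            return nonce, digest
        nonce += 1

# Hypothetical block content: a commitment to one round of model updates.
for difficulty in range(1, 5):
    nonce, digest = proof_of_work("round-7:update-digests", difficulty)
    print(difficulty, nonce + 1, digest[:12])  # nonce + 1 = hashes actually computed
```

The asymmetry between finding a nonce (expensive) and verifying one (one hash) is what secures the ledger, and it is also why this style of consensus adds latency to every recorded model update.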

However, significant progress is being made in 2026 to enhance the scalability of decentralized AI. Sharding techniques, where the network is divided into smaller, parallel processing units, are being explored to increase transaction capacity. Layer-2 scaling solutions, which process transactions off-chain before settling them on the main chain, also offer promising avenues for improving performance. Furthermore, the development of more efficient peer-to-peer communication protocols and lightweight consensus algorithms is critical for enabling large-scale decentralized AI deployments. While both paradigms are evolving to meet the demands of growing AI applications, their approaches to scalability are distinct and continue to be areas of active research and development.

Key Applications and Future Trajectories

The distinct characteristics of federated learning and decentralized AI naturally lend themselves to different application domains, though some overlap exists. Federated learning has found widespread adoption in scenarios where data is inherently siloed across numerous entities, and privacy regulations prohibit direct data sharing. Its impact is particularly notable in:

- Healthcare: Training diagnostic models using patient data from multiple hospitals without compromising individual privacy.

- Mobile Devices: Improving predictive text, voice recognition, and personalized recommendations on smartphones by learning from user interactions locally.

- Financial Institutions: Developing fraud detection models by leveraging transaction data from various banks while maintaining client confidentiality.

Over the course of 2026, federated learning is expected to expand further into smart cities, autonomous vehicles, and industrial IoT, where vast amounts of localized data can be leveraged for collective intelligence without centralizing sensitive information. The focus will be on making these systems more robust against adversarial attacks and improving the efficiency of model aggregation.

Emerging Frontiers for Decentralized AI

Decentralized AI is poised to revolutionize applications requiring extreme resilience, transparency, and trustless collaboration. Its future trajectory is closely tied to the advancements in blockchain and distributed ledger technologies. Key application areas include:

- Decentralized Autonomous Organizations (DAOs): Enabling AI agents to govern and operate organizations transparently and autonomously.

- Data Marketplaces: Creating secure and verifiable platforms for data sharing and monetization, where AI models can be trained on ethically sourced data with clear provenance.

- Edge AI and IoT: Deploying AI models directly on edge devices with enhanced security and reduced reliance on cloud infrastructure, fostering truly distributed intelligence.

In 2026, we anticipate decentralized AI playing a critical role in developing AI systems that are more resistant to censorship, manipulation, and single points of failure, particularly in critical infrastructure and sensitive government applications. The convergence of these two paradigms, where federated learning might be implemented on a decentralized infrastructure, could also unlock new possibilities for secure and collaborative AI.

Challenges and Opportunities for Secure Model Training

Both federated learning and decentralized AI present unique challenges and opportunities in the quest for secure model training. For federated learning, a significant challenge remains the potential for data poisoning, where a malicious client sends corrupted model updates to the central server, degrading the global model’s performance or introducing backdoors. Research in 2026 is actively exploring robust aggregation techniques and anomaly detection methods to identify and mitigate such threats. A further opportunity lies in integrating advanced cryptographic techniques, such as homomorphic encryption, which lets the server aggregate updates while they remain encrypted, so that even the central server cannot infer sensitive information from them.
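One family of robust aggregation rules replaces the plain average with order statistics computed per parameter. The sketch below compares a coordinate-wise mean, median and trimmed mean when one of five clients submits a poisoned update. These are well-known rules chosen for illustration, not necessarily the ones the research above uses. The client values are hypothetical. The median and trimmed mean tolerate a minority of outliers per coordinate but do not, on their own, detect subtler backdoor attacks.

```python
import statistics

def mean_aggregate(updates):
    """Plain coordinate-wise mean (FedAvg with equal client weights)."""
    return [statistics.fmean(col) for col in zip(*updates)]

def median_aggregate(updates):
    """Coordinate-wise median: each parameter takes the median across clients."""
    return [statistics.median(col) for col in zip(*updates)]

def trimmed_mean_aggregate(updates, trim):
    """Coordinate-wise trimmed mean: drop the `trim` largest and `trim` smallest
    values for each parameter, then average what remains."""
    if 2 * trim >= len(updates):
        raise ValueError("trim too large for the number of clients")
    return [statistics.fmean(sorted(col)[trim:len(col) - trim]) for col in zip(*updates)]

# Hypothetical round: four honest clients plus one poisoned update.
honest = [[1.0, -0.5], [1.1, -0.4], [0.9, -0.6], [1.05, -0.55]]
updates = honest + [[100.0, 100.0]]
print(mean_aggregate(updates))             # dragged far from the honest updates
print(median_aggregate(updates))           # [1.05, -0.5]
print(trimmed_mean_aggregate(updates, 1))  # close to the honest updates
```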

The ethical implications of federated learning also present a complex challenge. Ensuring fairness across diverse client datasets and preventing biases from being amplified in the global model is crucial. Opportunities in this area involve developing explainable AI (XAI) tools that can elucidate how local contributions impact the global model, fostering greater transparency and trust.

Navigating the Decentralized AI Landscape

Decentralized AI, while offering significant security advantages, faces challenges related to consensus overhead and the complexity of managing a truly distributed network. Ensuring that all participating nodes maintain data consistency and agree on model states without a central authority requires sophisticated algorithms and protocols. The opportunity here lies in the development of more efficient and scalable consensus mechanisms that can support the high computational demands of AI model training.

Furthermore, the legal and regulatory frameworks for decentralized AI are still evolving. Clarifying data ownership, liability, and governance in a system without a central controlling entity presents both a challenge and an opportunity to shape future digital policies. The potential for decentralized AI to create truly open and democratic AI ecosystems, where power is distributed rather than concentrated, represents a transformative opportunity for the future of technology and society. Overcoming these challenges will pave the way for more resilient, private, and ethically sound AI systems.

| Key Aspect | Federated Learning (FL) | Decentralized AI (D-AI) |
|---|---|---|
| Architectural Model | Centralized aggregation | Peer-to-peer network |
| Data Privacy | Data stays local; only model updates are shared | Cryptographic proofs; distributed ledger for integrity |
| Control & Trust | Relies on trust in the central aggregator | Trustless; distributed consensus |
| Scalability | Central server can become a bottleneck | Consensus overhead, but high resilience |

Frequently Asked Questions About Secure AI Training

What is the main difference between Federated Learning and Decentralized AI?

Federated Learning keeps raw data on local devices and shares only model updates with a central server, which aggregates them into a global model. Decentralized AI, conversely, distributes both data and computation across a peer-to-peer network, often using blockchain for secure, trustless interactions without a central authority.

How does each approach protect data privacy and security?

Federated Learning enhances privacy by ensuring sensitive data never leaves its source, reducing central data breach risks. Decentralized AI uses cryptographic techniques and distributed ledgers to ensure data integrity, tamper-evidence, and transparency, making it resistant to censorship and single points of failure.

Which approach is better suited to healthcare?

Both are suitable, but Federated Learning is currently more mature for direct application in healthcare because it can leverage existing infrastructure and offers a more established compliance path for local data processing. Decentralized AI is gaining ground for its enhanced security and auditability.

What scalability challenges does each approach face?

Federated Learning faces scalability issues from central server communication overhead as client numbers grow. Decentralized AI contends with the computational intensity and latency of its distributed consensus mechanisms, though advancements are being made in both areas to address these limitations.

Can Federated Learning and Decentralized AI be combined?

Yes, combining them represents a promising future. Federated Learning could operate on a decentralized infrastructure, where the central aggregator’s role is replaced by a distributed consensus mechanism, offering the privacy benefits of FL with the enhanced security and resilience of decentralized networks.

Conclusion

The comparative analysis of federated learning and decentralized AI in 2026 reveals two distinct yet complementary pathways for secure model training. Federated learning excels in collaborative environments where data privacy is paramount and a degree of centralized coordination is acceptable, while decentralized AI offers strong resilience, transparency, and trustlessness through truly distributed architectures. Both paradigms are critical for advancing AI in a privacy-conscious and secure manner, offering robust solutions to the complex challenges of data governance and model integrity. As AI continues to evolve, understanding the strengths and weaknesses of each will be essential for developing intelligent systems that are not only powerful but also ethical and secure.