AI Model Interpretability: New US Federal Guidelines Expected by Q3 2025

New federal guidelines for AI model interpretability are expected by Q3 2025, creating an urgent need for organizations to prioritize transparent and understandable AI systems to ensure fairness, accountability, and public trust.

The landscape of artificial intelligence is evolving at an unprecedented pace, bringing with it both immense opportunity and significant challenges. One of the most pressing concerns in this rapidly advancing field is AI model interpretability, especially with new federal guidelines anticipated by Q3 2025. This time-sensitive development means organizations across the United States must act swiftly to understand and prepare for these upcoming regulations, ensuring their AI systems are not only effective but also transparent and accountable.

Understanding the core of AI interpretability

AI interpretability refers to the ability to explain or present the workings of an AI model in understandable terms to humans. As AI systems become more complex and integrated into critical applications, the demand for transparency grows. This is not merely a technical challenge but a fundamental requirement for trust, fairness, and accountability in AI deployment.

The push for interpretability stems from several key areas. Firstly, it allows developers and stakeholders to debug models more effectively, identifying biases or errors that might otherwise remain hidden. Secondly, it builds user confidence, particularly in sensitive sectors like healthcare or finance, where decisions have profound real-world impacts. Finally, regulatory bodies are increasingly recognizing interpretability as a cornerstone of responsible AI governance.

Why interpretability matters now more than ever

- Ethical AI Development: Ensuring AI systems align with human values and avoid perpetuating societal biases.

- Regulatory Compliance: Meeting forthcoming federal standards and avoiding potential legal repercussions.

- Enhanced Trust: Building confidence among users, stakeholders, and the general public in AI-driven decisions.

- Improved Debugging: Pinpointing and rectifying errors or unexpected behaviors within complex AI models.

The urgency surrounding AI interpretability is underscored by the rapid integration of AI into daily life. From loan applications to medical diagnoses, AI models are making decisions that directly affect individuals. Without clear explanations of how these decisions are reached, there is a significant risk of opaque systems perpetuating unfairness or discrimination. This lack of transparency can erode public trust and hinder the widespread adoption of beneficial AI technologies. Therefore, understanding and implementing interpretability techniques are no longer optional but essential for any organization leveraging AI.

The impending federal guidelines: what to expect by Q3 2025

The United States government is actively working to establish comprehensive federal guidelines for AI, with a particular focus on interpretability, transparency, and accountability. These guidelines are expected to be formalized and released by Q3 2025, marking a significant shift in how AI models are developed, deployed, and monitored across various sectors. The anticipation of these regulations signals a maturing stance on AI governance, moving beyond abstract discussions to concrete requirements.

While the exact details are still under wraps, initial indications suggest that the guidelines will likely cover a broad spectrum of AI applications, from those used in critical infrastructure to consumer-facing services. The primary goal is to ensure that AI systems are not only effective but also understandable, auditable, and fair. This proactive approach aims to foster innovation responsibly, mitigating potential risks associated with opaque AI models.

Key areas likely to be addressed

- Transparency Requirements: Mandating clearer documentation of AI model design, training data, and decision-making processes.

- Explainability Standards: Requiring mechanisms to explain AI outputs, especially for high-stakes decisions.

- Bias Detection and Mitigation: Emphasizing methodologies to identify and address algorithmic biases.

- Auditability and Accountability: Establishing frameworks for auditing AI systems and assigning responsibility for their outcomes.
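
The bias detection item above can be made concrete with a small check. The following is a minimal sketch, not a method the anticipated guidelines are known to prescribe: it compares approval rates across groups and flags a large gap. The function names, the sample decisions, and the 0.8 threshold (borrowed from the "four-fifths rule" used in US employment-selection guidance) are all assumptions chosen for illustration.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True for a favorable decision.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Ratio of the lowest group selection rate to the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Hypothetical decisions: (group label, loan approved?)
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 42 + [("B", False)] * 58
)

ratio = disparate_impact_ratio(decisions)
REVIEW_THRESHOLD = 0.8  # assumed cutoff, borrowed from the four-fifths rule
needs_review = ratio < REVIEW_THRESHOLD
print(f"disparate impact ratio: {ratio:.2f}, needs review: {needs_review}")
```

A ratio well below 1.0 does not prove discrimination on its own, but it is the kind of measurable signal an audit could record and follow up on.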

Organizations should prepare for a regulatory environment that demands a higher degree of diligence in their AI development lifecycle. This includes implementing robust data governance practices, adopting explainable AI (XAI) techniques, and fostering a culture of ethical AI. The expected Q3 2025 release is not just a date but a call to action for businesses to align their AI strategies with future federal mandates, ensuring compliance and maintaining their competitive edge.

Impact on various sectors: healthcare, finance, and beyond

The upcoming federal guidelines on AI model interpretability will reverberate across numerous industries, with some sectors experiencing a more profound and immediate impact due to the sensitive nature of their operations. Healthcare and finance, in particular, stand at the forefront of this regulatory shift, given their reliance on AI for critical decision-making that directly affects individuals’ well-being and financial stability.

In healthcare, AI is increasingly used for diagnostics, personalized treatment plans, and drug discovery. The ability to interpret an AI model’s reasoning becomes paramount when a patient’s life is on the line. Doctors need to understand why an AI recommended a particular diagnosis or treatment to ensure patient safety and build trust. Similarly, in finance, AI-driven algorithms determine credit scores, loan approvals, and fraud detection. Explaining these decisions is crucial for consumer protection and preventing discriminatory practices. The new guidelines will likely mandate a high level of transparency in these areas.

Beyond these high-stakes sectors, other industries will also feel the ripple effect. In manufacturing, AI optimizes supply chains and predictive maintenance; interpretability can help engineers understand system failures. In legal tech, AI assists with document review and case prediction; transparency ensures fairness and due process. The common thread is the need for clarity and accountability, ensuring that AI serves humanity ethically and effectively. The guidelines will likely establish a baseline for all AI systems, fostering a universal standard of responsible AI deployment.

Sector-specific considerations

- Healthcare: Clinical decision support systems must provide clear rationales for recommendations to aid medical professionals.

- Finance: Credit scoring and fraud detection algorithms require detailed explanations to justify decisions and ensure non-discrimination.

- Legal: AI tools supporting legal processes need transparent logic to uphold principles of justice and fairness.

- Retail: Recommendation engines and pricing algorithms should be auditable to prevent consumer manipulation or unfair practices.
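
To illustrate what a "detailed explanation" for a credit decision might look like in practice, here is a minimal sketch of a rule-based check that returns its reasons alongside the outcome. The field names and every threshold are hypothetical and chosen only for illustration; a real scorecard would be derived from data and reviewed by compliance and legal teams.

```python
def assess_application(applicant):
    """Return (approved, reasons) for a hypothetical rule-based credit check.

    All thresholds below are made up for illustration.
    """
    reasons = []
    if applicant["debt_to_income"] > 0.40:
        reasons.append("Debt-to-income ratio above 40%")
    if applicant["months_since_delinquency"] < 12:
        reasons.append("Delinquency within the last 12 months")
    if applicant["credit_history_years"] < 2:
        reasons.append("Credit history shorter than 2 years")
    approved = not reasons  # approve only when no rule was triggered
    return approved, reasons


approved, reasons = assess_application({
    "debt_to_income": 0.45,
    "months_since_delinquency": 30,
    "credit_history_years": 1,
})
print(approved)
for reason in reasons:
    print("-", reason)
```

Because each rule maps directly to a human-readable reason, the same output can serve the applicant, the loan officer, and an auditor.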

The proactive engagement of sector-specific stakeholders will be vital in shaping the practical implementation of these guidelines. Each industry faces unique challenges and opportunities regarding AI, and a tailored approach to interpretability will be necessary to balance compliance with innovation. The overarching goal remains to ensure that AI’s transformative power is harnessed responsibly across all domains.

Strategies for organizations to prepare for the 2025 deadline

With new federal guidelines on AI model interpretability expected by Q3 2025, organizations must begin implementing proactive strategies to ensure compliance and maintain operational integrity. Waiting until the last minute could result in significant disruptions, costly retrofits, and potential regulatory penalties. A comprehensive approach involves technical, organizational, and cultural shifts.

Firstly, a thorough assessment of existing AI systems is crucial. This involves identifying which models are currently in use, their level of interpretability, and their potential exposure to regulatory scrutiny. Understanding the current state allows for targeted improvements. Secondly, investing in explainable AI (XAI) tools and techniques is paramount. These tools can help shed light on the inner workings of complex models, making their decisions more understandable to human operators and auditors.

Actionable steps for preparation

- Inventory AI Systems: Document all AI models in use, their purpose, data sources, and current interpretability levels.

- Invest in XAI Tools: Adopt technologies like SHAP, LIME, and feature importance analyses to enhance model transparency.

- Develop Internal Policies: Create clear guidelines for AI development, deployment, and monitoring that prioritize interpretability and ethics.

- Train Staff: Educate data scientists, engineers, and leadership on interpretability principles and new regulatory requirements.

- Engage Legal Counsel: Consult with legal experts to understand potential liabilities and ensure compliance with emerging regulations.
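
The inventory step above lends itself to a simple, structured record. The sketch below shows one possible shape for such a record and a helper that lists high-stakes models with no documented explanation method. The class name, fields, and sample entries are assumptions for illustration, not a schema taken from any regulation.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    """One entry in a hypothetical AI system inventory."""
    name: str
    purpose: str
    data_sources: list = field(default_factory=list)
    high_stakes: bool = False      # affects credit, health, legal outcomes, etc.
    explanation_method: str = ""   # e.g. "SHAP", "LIME", "rule-based"; empty if none


def needs_attention(inventory):
    """Return names of high-stakes models with no documented explanation method."""
    return [
        record.name
        for record in inventory
        if record.high_stakes and not record.explanation_method
    ]


# Hypothetical inventory entries
inventory = [
    ModelRecord("credit_scoring_v3", "loan approval", ["bureau_data"], True, "SHAP"),
    ModelRecord("fraud_detector", "transaction screening", ["card_events"], True),
    ModelRecord("product_recs", "recommendations", ["clickstream"], False),
]

print(needs_attention(inventory))
```

Even a lightweight register like this gives the assessment a starting point: it shows where interpretability work is already documented and where the gaps are.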

Beyond technical solutions, organizations need to foster a culture that values transparency and ethical AI. This includes establishing internal governance structures, such as AI ethics committees, to oversee development and deployment. Regular audits and reviews of AI models will become standard practice, ensuring ongoing compliance and continuous improvement. By adopting a holistic strategy, businesses can navigate the evolving regulatory landscape successfully and emerge stronger, with more trustworthy and responsible AI systems.

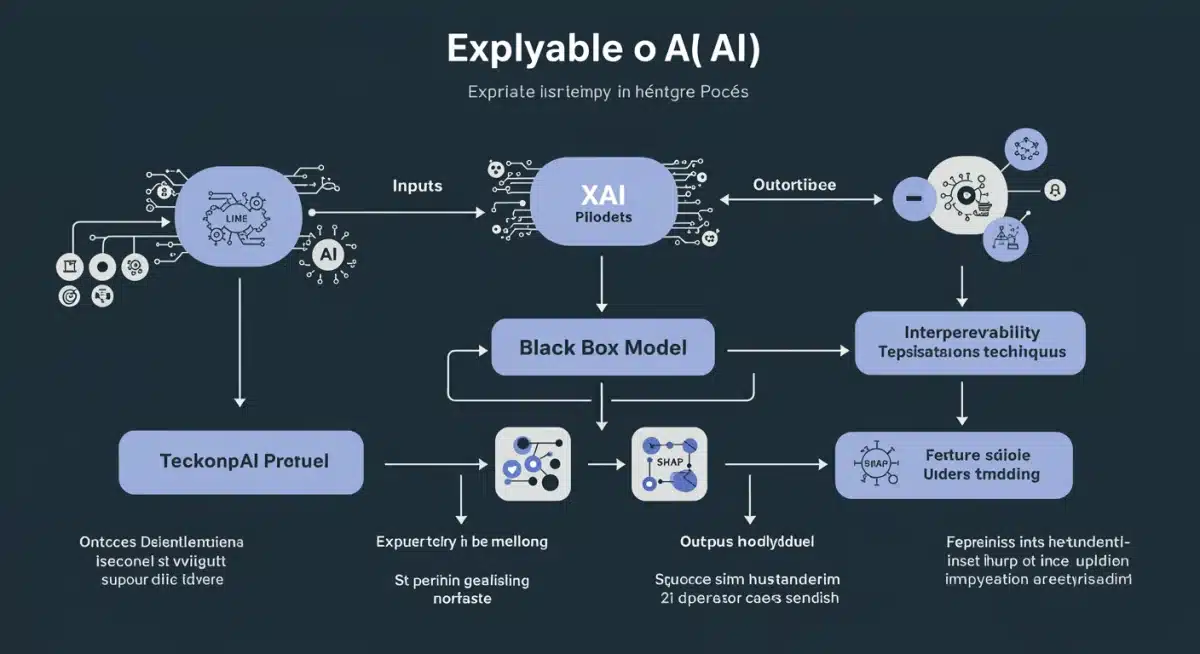

The role of explainable AI (XAI) in achieving compliance

Explainable AI (XAI) is not just a buzzword; it is a critical set of methodologies and tools that will be instrumental in helping organizations meet the forthcoming federal AI model interpretability guidelines. As AI models become increasingly sophisticated and opaque, XAI provides the necessary bridge between complex algorithms and human understanding, a bridge the guidelines are expected to require. Integrating XAI into the AI development lifecycle is no longer optional but a strategic imperative.

XAI techniques aim to make AI decisions transparent, allowing stakeholders to understand why a model made a particular prediction or classification. This is crucial for debugging, identifying biases, and building trust. For instance, techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) can provide insights into feature importance and local decision boundaries, offering granular explanations for individual predictions. These tools move beyond simply stating an outcome, providing a narrative of the AI’s reasoning process.
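
To show the idea behind SHAP, the sketch below computes exact Shapley values for a single prediction by brute force. It is not the `shap` library and not how that library computes its results in practice; it enumerates every feature coalition, which is only feasible for a handful of features. The toy model, the instance, and the baseline input are assumptions chosen for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2**n, so this is only practical for a few features.
    """
    n = len(instance)

    def value(coalition):
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    contributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                total += weight * (value(coalition | {i}) - value(coalition))
        contributions.append(total)
    return contributions


def toy_score(features):
    # Hypothetical scoring model with one interaction term (x0 * x2).
    x0, x1, x2 = features
    return 2.0 * x0 + 1.0 * x1 + x0 * x2


instance = [3.0, 4.0, 2.0]  # the prediction being explained
baseline = [1.0, 1.0, 0.0]  # assumed reference input

phi = shapley_values(toy_score, instance, baseline)
gap = toy_score(instance) - toy_score(baseline)
print("contributions:", phi)
print("sum of contributions:", sum(phi), "prediction minus baseline:", gap)
```

The contributions add up to the difference between the prediction and the baseline prediction, which is the property that makes this kind of per-feature attribution easy to present to a reviewer.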

Key XAI techniques and their benefits

- SHAP Values: Quantify the contribution of each feature to a prediction, offering global and local interpretability.

- LIME: Explains the predictions of any classifier by approximating it locally with an interpretable model.

- Feature Importance: Ranks features based on their impact on model output, highlighting key drivers.

- Decision Trees/Rules: Intrinsically interpretable models that provide clear, human-readable decision paths.
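
Feature importance can be estimated in several ways. One common, model-agnostic option is permutation importance: shuffle one feature at a time and measure how much the model's error grows. The sketch below uses only the standard library; the toy model, the synthetic data, and the function names are assumptions for illustration.

```python
import random


def mean_abs_error(predict, rows, targets):
    """Mean absolute error of `predict` over the dataset."""
    return sum(abs(predict(r) - t) for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(predict, rows, targets, n_repeats=10, seed=0):
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)
    base_error = mean_abs_error(predict, rows, targets)
    importances = []
    for col in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            # Rebuild each row with only this column's value replaced
            permuted = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled)]
            increases.append(mean_abs_error(predict, permuted, targets) - base_error)
        importances.append(sum(increases) / n_repeats)
    return importances


def toy_model(row):
    # Hypothetical fitted model: feature 0 matters most, feature 2 is ignored.
    return 3.0 * row[0] + 1.0 * row[1] + 0.0 * row[2]


# Synthetic data: three features drawn uniformly from [0, 1]
data_rng = random.Random(42)
rows = [[data_rng.uniform(0, 1) for _ in range(3)] for _ in range(200)]
targets = [toy_model(r) for r in rows]

scores = permutation_importance(toy_model, rows, targets)
ranking = sorted(range(3), key=lambda i: scores[i], reverse=True)
print("importance scores:", scores)
print("features ranked by importance:", ranking)
```

A feature the model ignores gets a score of zero, while features the model relies on heavily show a larger error increase when shuffled.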

Implementing XAI involves more than just applying a few tools; it requires a paradigm shift in how AI is designed and evaluated. It means incorporating interpretability considerations from the initial data collection phase through model deployment and ongoing monitoring. Organizations that proactively adopt XAI will not only be better positioned for regulatory compliance but will also develop more robust, reliable, and trustworthy AI systems. This commitment to explainability will differentiate leaders in the AI space and foster greater public confidence in AI technologies.

Long-term benefits of embracing interpretable AI

While the immediate impetus for focusing on AI model interpretability is the federal guidance expected by Q3 2025, the long-term benefits of embracing interpretable AI extend far beyond mere compliance. Organizations that integrate transparency and explainability into their AI strategies will gain significant competitive advantages, foster greater innovation, and build enduring trust with their customers and the public.

One of the primary long-term advantages is enhanced model reliability and robustness. Interpretable models are easier to debug, allowing developers to identify and correct errors, biases, or vulnerabilities more efficiently. This leads to more stable and dependable AI systems that perform consistently over time. Furthermore, understanding the reasoning behind AI decisions facilitates continuous improvement, as insights gained from interpretability can inform model retraining and refinement, leading to superior performance and adaptability.

Moreover, transparent AI systems significantly boost user confidence and adoption. When users understand how an AI arrives at its conclusions, they are more likely to trust and utilize these technologies. This is particularly crucial in sensitive applications where skepticism might otherwise hinder acceptance. By fostering trust, organizations can accelerate the integration of AI into new products and services, driving innovation and market leadership. Interpretable AI also supports ethical governance, allowing for clearer accountability and reducing the risk of unintended societal harms, which in turn protects brand reputation and fosters a positive public image.

Enduring advantages of transparent AI

- Increased Trust and Adoption: Users are more likely to embrace AI solutions they understand and trust.

- Improved Model Performance: Easier debugging and continuous refinement lead to more accurate and reliable models.

- Enhanced Innovation: Clearer understanding of AI workings can spark new ideas and applications.

- Stronger Ethical Governance: Supports accountability, reduces bias, and protects against reputational damage.

- Competitive Differentiation: Organizations known for ethical and transparent AI will attract top talent and customers.

Ultimately, investing in AI interpretability is an investment in the future of responsible AI. It positions organizations not just as compliant entities, but as leaders in ethical innovation, capable of harnessing AI’s full potential while upholding societal values. The expected Q3 2025 release is merely the beginning of a journey towards a more transparent, accountable, and beneficial AI ecosystem.

| Key Aspect | Brief Description |
|---|---|
| Deadline Urgency | New federal AI interpretability guidelines expected by Q3 2025, mandating proactive preparation. |
| Core Interpretability | Ability to explain AI model workings for trust, fairness, and accountability. |
| Impacted Sectors | Healthcare, finance, and others face significant changes due to critical AI applications. |
| XAI Role | Explainable AI (XAI) tools and techniques are crucial for achieving compliance and transparency. |

Frequently Asked Questions about AI Interpretability Guidelines

What are AI model interpretability guidelines?

AI model interpretability guidelines are a set of rules and standards that dictate how AI systems should be designed, developed, and deployed to ensure their decision-making processes are understandable and auditable by humans. These guidelines aim to promote transparency, fairness, and accountability in AI applications, especially in critical sectors.

Why is preparing for the guidelines urgent?

The urgency stems from the guidelines' expected release by Q3 2025. Organizations need to proactively adapt their AI systems to comply with these impending regulations, avoiding potential legal issues, financial penalties, and reputational damage. Early preparation ensures a smoother transition and continued operational integrity.

Which industries will be most affected?

Industries heavily reliant on AI for critical decisions, such as healthcare, finance, and legal services, will experience the most significant impact. However, the guidelines are expected to have broad applicability, affecting any sector that deploys AI models with real-world implications, including manufacturing and retail.

How does explainable AI (XAI) help with compliance?

XAI provides the tools and techniques necessary to make complex AI models understandable. By using methods like SHAP and LIME, organizations can explain model predictions, identify biases, and demonstrate transparency, which are expected to be core requirements of the anticipated federal interpretability guidelines.

What are the long-term benefits of AI interpretability?

Beyond compliance, embracing AI interpretability leads to increased trust, improved model reliability, enhanced innovation, and stronger ethical governance. It fosters a competitive advantage by building public confidence in AI technologies, attracting talent, and ensuring responsible AI deployment for sustained success.

Conclusion

The impending federal guidelines on AI model interpretability, expected by Q3 2025, represent a pivotal moment for the artificial intelligence landscape in the United States. This time-sensitive development underscores the critical need for organizations to prioritize transparency, accountability, and ethical considerations in their AI systems. By proactively assessing existing models, investing in Explainable AI (XAI) tools, and fostering a culture of responsible AI development, businesses can not only ensure compliance but also unlock long-term benefits such as enhanced trust, improved model performance, and sustained innovation. The future of AI is not just about intelligence, but about understandable intelligence, building a foundation of confidence and ethical practice for the years to come.