Ethical AI Development: Reducing Bias by 15% in Models

Targeted data preprocessing, rigorous model evaluation, and continuous monitoring can reduce measured bias in AI models. A 15% reduction in a chosen fairness metric within three months is a workable target, and one that fosters more equitable and reliable systems.

In today’s rapidly evolving technological landscape, ethical AI development has become a critical challenge for organizations worldwide, and reducing bias in your models by 15% within three months is a practical, measurable way to start. As artificial intelligence systems become more integrated into our daily lives, ensuring their fairness and impartiality is no longer just a regulatory concern but a fundamental ethical responsibility. This article delves into actionable strategies to achieve measurable bias reduction, empowering you to build more trustworthy and effective AI.

Understanding AI Bias: Root Causes and Impact

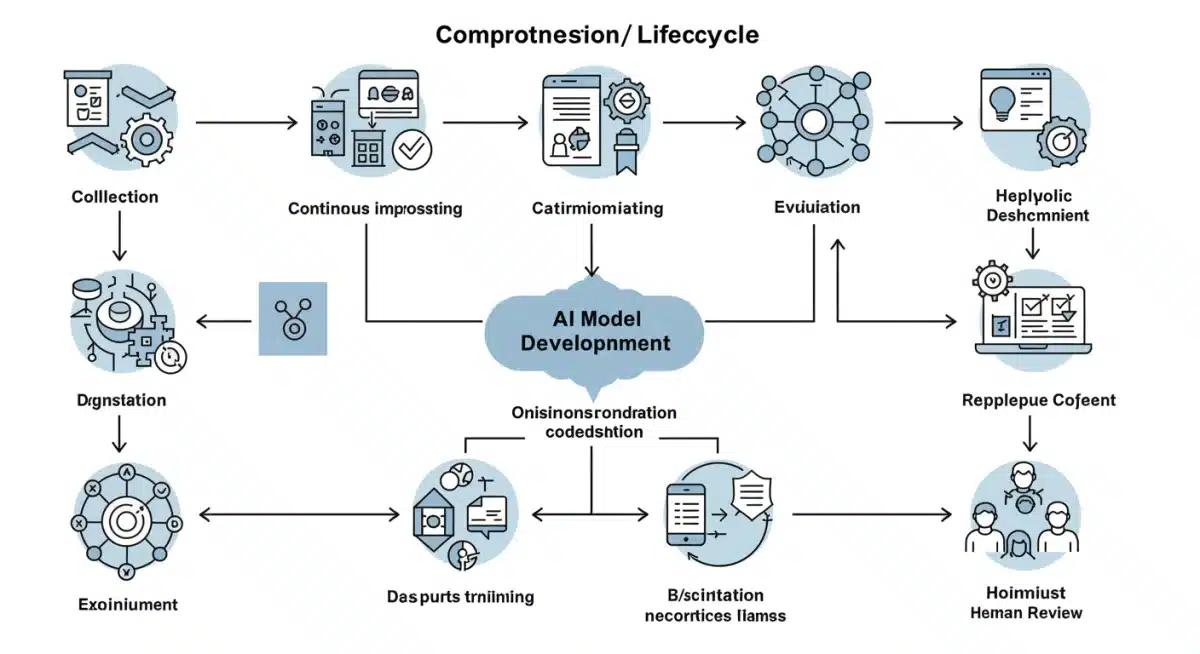

AI bias, often an unintended consequence, arises from various stages of the development lifecycle, from data collection to model deployment. Recognizing its origins is the first step toward effective mitigation and achieving significant reductions.

The impact of biased AI can be profound, leading to discriminatory outcomes, erosion of trust, and significant reputational and financial costs for organizations. Addressing these root causes systematically is essential for building ethical AI systems that serve all users equitably.

Data Collection and Preparation: The Foundation of Fairness

Bias often begins long before a model is trained, primarily during data collection and preparation. If the data used to train an AI model is unrepresentative, incomplete, or reflects societal prejudices, the model will inevitably learn and perpetuate those biases.

- Representative Sampling: Ensure your datasets accurately reflect the diversity of the population the AI system will serve.

- Data Augmentation: Strategically augment underrepresented classes to balance dataset distribution.

- Feature Engineering Review: Scrutinize features for proxies of sensitive attributes that could introduce indirect bias.
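A representative-sampling check can be sketched in a few lines. The snippet below is a minimal illustration, not a complete audit: the function name `representation_gaps`, the group labels, and the reference shares are all hypothetical, and it assumes you already have a trusted estimate of each group's share in the population the system will serve.

```python
from collections import Counter

def representation_gaps(sample_groups, reference_shares):
    """Return (share in sample) minus (share in reference) for each group.

    Positive values mean the group is over-represented in the sample,
    negative values mean it is under-represented.
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }

# Hypothetical data: group label per training row, and assumed population shares.
sample = ["a"] * 70 + ["b"] * 20 + ["c"] * 10
reference = {"a": 0.5, "b": 0.3, "c": 0.2}

gaps = representation_gaps(sample, reference)
for group, gap in gaps.items():
    print(f"group {group}: {gap:+.2f}")
```

Groups with a clearly negative gap are candidates for additional collection or augmentation.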

Algorithmic Bias: Inherited and Amplified

Beyond data, the algorithms themselves can introduce or amplify bias. Certain algorithms might prioritize specific patterns or features, inadvertently leading to unfair outcomes for particular groups. This is especially true for complex deep learning models where interpretability is often a challenge.

Understanding the mathematical foundations and architectural choices of your algorithms is crucial. Regularly reviewing and selecting algorithms known for their fairness-aware properties can significantly contribute to bias reduction efforts.

Establishing a Baseline and Setting Measurable Goals

Before embarking on any bias reduction initiative, it’s crucial to establish a clear baseline of your current model’s performance concerning fairness. This involves identifying potential sources of bias and quantifying their impact on different demographic groups.

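Setting a measurable goal, such as a 15% reduction within three months, provides a concrete target and allows for effective tracking of progress. To be measurable, the goal should name the metric it applies to: for example, a 15% relative reduction in the gap between groups on a chosen fairness metric, compared with the documented baseline. This objective approach ensures that efforts are focused and results-driven.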
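One way to make such a target concrete is to treat it as a relative reduction in a disparity metric. The sketch below assumes this interpretation. The baseline value of 0.20 and the helper names `target_gap` and `relative_reduction` are hypothetical.

```python
def target_gap(baseline_gap, reduction=0.15):
    """Disparity value that corresponds to the requested relative reduction."""
    return baseline_gap * (1.0 - reduction)

def relative_reduction(baseline_gap, current_gap):
    """Fraction by which the disparity has shrunk relative to the baseline."""
    if baseline_gap <= 0:
        raise ValueError("baseline_gap must be positive")
    return (baseline_gap - current_gap) / baseline_gap

# Hypothetical baseline: a 0.20 gap in positive prediction rates between groups.
baseline = 0.20
goal = target_gap(baseline)               # about 0.17
progress = relative_reduction(baseline, 0.18)  # about 0.10, i.e. 10% so far
print(f"target gap: {goal:.3f}, progress so far: {progress:.0%}")
```

Stating the target this way also makes it clear that the 15% figure depends on which metric and which baseline are used.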

Identifying Key Bias Metrics

Various fairness metrics exist, each offering a different perspective on bias. Selecting the right metrics depends on the specific context and potential impact of your AI system.

- Demographic Parity: Ensures that positive outcomes are equally likely across different groups.

- Equal Opportunity: Focuses on equal true positive rates for different groups.

- Predictive Equality: Aims for equal false positive rates across groups.

It is important to understand that no single metric can capture all aspects of fairness, and often, there are trade-offs between them. A multi-metric approach provides a more comprehensive view.
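The three metrics above can be computed from labels, predictions, and group membership. The following is a minimal sketch using only the standard library. The toy data and the helper names `group_rates` and `max_gap` are illustrative assumptions, and it reports each metric as the largest difference between any two groups.

```python
def rate(numerator, denominator):
    """Safe division; returns NaN when a group has no relevant rows."""
    return numerator / denominator if denominator else float("nan")

def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    stats = {}
    for g in sorted(set(groups)):
        rows = [(t, p) for t, p, grp in zip(y_true, y_pred, groups) if grp == g]
        tp = sum(1 for t, p in rows if t == 1 and p == 1)
        fp = sum(1 for t, p in rows if t == 0 and p == 1)
        pos = sum(1 for t, _ in rows if t == 1)
        neg = len(rows) - pos
        stats[g] = {
            "selection_rate": rate(tp + fp, len(rows)),  # demographic parity
            "tpr": rate(tp, pos),                        # equal opportunity
            "fpr": rate(fp, neg),                        # predictive equality
        }
    return stats

def max_gap(stats, key):
    """Largest difference in the given rate between any two groups."""
    values = [s[key] for s in stats.values()]
    return max(values) - min(values)

# Toy data for illustration only.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

stats = group_rates(y_true, y_pred, groups)
for key in ("selection_rate", "tpr", "fpr"):
    print(key, "gap:", max_gap(stats, key))
```

Reporting all three gaps side by side is one simple way to take the multi-metric view, since an intervention that narrows one gap can widen another.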

Benchmarking Current Performance

Once metrics are chosen, benchmark your existing AI models against these fairness criteria. This involves analyzing predictions across different sensitive attributes (e.g., gender, race, age) to identify disparities.

Documenting these baseline measurements is vital for demonstrating progress toward the 15% reduction target. This initial assessment provides the necessary data to justify interventions and track their effectiveness over time.

Data-Centric Strategies for Bias Mitigation

The most effective path to reducing AI bias often begins with the data. Implementing robust data-centric strategies can address imbalances and ensure that your models learn from a more equitable representation of the world.

These strategies are not one-time fixes but require continuous effort and monitoring. A proactive approach to data quality and diversity is fundamental for sustainable bias reduction.

Pre-processing Techniques: Cleaning and Balancing Data

Pre-processing techniques aim to mitigate bias before the model even sees the data. Because they change only the training set, they can be combined with any downstream model.

- Resampling: Over-sampling minority classes or under-sampling majority classes to balance the dataset.

- Re-weighting: Assigning different weights to data points to emphasize underrepresented groups.

- Data Anonymization: Removing or masking sensitive identifiable information to prevent direct discriminatory use.

Careful application of these techniques is crucial to avoid introducing new biases or diminishing the overall utility of the dataset. Regular review of the impact of these changes is recommended.
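As an illustration of re-weighting, the sketch below uses one common scheme: each row receives the weight P(group) × P(label) / P(group, label), so that group and label look statistically independent in the weighted data. The function name `reweighing_weights` and the toy data are hypothetical, and the weights would still need to be passed to a learner that accepts per-sample weights.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each row by P(group) * P(label) / P(group, label).

    Rows from (group, label) combinations that are rarer than independence
    would predict get weights above 1; over-represented combinations get
    weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

# Toy data: group "a" has mostly positive labels, group "b" mostly negative.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)

def weighted_positive_rate(group):
    """Weighted share of positive labels within one group."""
    rows = [(w, y) for w, y, g in zip(weights, labels, groups) if g == group]
    return sum(w for w, y in rows if y == 1) / sum(w for w, _ in rows)

print(weighted_positive_rate("a"), weighted_positive_rate("b"))
```

After weighting, both groups have the same weighted positive rate in this toy example, while the total weight still equals the number of rows.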

Augmenting and Synthesizing Data for Diversity

When real-world data is scarce or inherently biased, data augmentation and synthesis can be powerful tools. These techniques create new data points that increase representation and diversity.

Synthetic data generation, when done responsibly, can help fill gaps in real datasets without compromising privacy. However, it’s essential to validate that synthetic data accurately reflects real-world distributions and doesn’t inadvertently introduce new biases.

Model-Centric Approaches: Fairer Algorithms and Training

While data is paramount, modifying the AI model and its training process can also play a significant role in reducing bias. These approaches often involve algorithmic adjustments or incorporating fairness constraints during training.

Integrating fairness objectives directly into the model training process can lead to models that are not only accurate but also inherently more equitable in their predictions.

In-processing Techniques: Adjusting During Training

In-processing methods modify the learning algorithm itself to promote fairness. These techniques intervene during the model training phase to influence how the model learns from the data.

- Adversarial Debiasing: Training an adversary to predict sensitive attributes, forcing the main model to learn representations that are independent of these attributes.

- Regularization: Adding fairness-aware regularization terms to the loss function, penalizing models that exhibit biased behavior.

- Fairness Constraints: Directly imposing constraints on the model’s predictions to satisfy specific fairness criteria during optimization.

The choice of in-processing technique often depends on the specific type of bias being addressed and the computational resources available. Experimentation and validation are key.
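To show what a fairness-aware regularization term can look like, here is a minimal sketch of a penalized loss. It assumes a binary classifier that outputs probabilities and uses, as one possible penalty, the squared gap in mean predicted score between groups. The function name `fairness_penalized_loss`, the penalty choice, and the weight `lam` are illustrative assumptions rather than a standard API.

```python
import math

def fairness_penalized_loss(probs, labels, groups, lam=1.0, eps=1e-12):
    """Mean log loss plus lam * (gap in mean predicted score between groups)^2."""
    # Standard binary cross-entropy, clipped to avoid log(0).
    log_loss = -sum(
        y * math.log(max(p, eps)) + (1 - y) * math.log(max(1 - p, eps))
        for p, y in zip(probs, labels)
    ) / len(labels)

    # Mean predicted score per group.
    by_group = {}
    for p, g in zip(probs, groups):
        by_group.setdefault(g, []).append(p)
    means = [sum(v) / len(v) for v in by_group.values()]

    # Penalize the spread between the highest and lowest group means.
    gap = max(means) - min(means)
    return log_loss + lam * gap ** 2

# Toy predictions: accurate, but scores differ sharply between groups.
probs = [0.8, 0.8, 0.2, 0.2]
labels = [1, 1, 0, 0]
groups = ["a", "a", "b", "b"]

plain = fairness_penalized_loss(probs, labels, groups, lam=0.0)
penalized = fairness_penalized_loss(probs, labels, groups, lam=1.0)
print(plain, penalized)
```

In a real training loop this loss would be minimized with a gradient-based optimizer, and `lam` would be tuned to balance accuracy against the fairness penalty.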

Post-processing Techniques: Rectifying Predictions

Even after a model is trained, post-processing techniques can adjust its predictions to improve fairness. These methods are applied to the model’s output without altering the model itself.

For example, threshold adjustment can be used to equalize positive prediction rates across different groups. While effective, post-processing should ideally be a last resort, as addressing bias further upstream in the development pipeline is generally more robust.
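Threshold adjustment of this kind can be sketched as follows. The example picks a separate cut-off per group so that roughly the same share of each group receives a positive prediction. The helper names, the toy scores, and the 50% target rate are hypothetical, and ties in the scores can push a group's actual rate above the target.

```python
def threshold_for_rate(scores, target_rate):
    """Score cut-off that selects roughly target_rate of the given scores."""
    k = round(target_rate * len(scores))  # number of rows to select
    if k <= 0:
        return float("inf")               # select nobody
    ranked = sorted(scores, reverse=True)
    return ranked[k - 1]                  # k-th highest score

def group_thresholds(scores, groups, target_rate):
    """Compute one threshold per group for the same target selection rate."""
    by_group = {}
    for s, g in zip(scores, groups):
        by_group.setdefault(g, []).append(s)
    return {g: threshold_for_rate(v, target_rate) for g, v in by_group.items()}

def predict(scores, groups, thresholds):
    """Apply each row's group-specific threshold to its score."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]

# Toy scores: group "a" receives systematically higher scores than group "b".
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

thresholds = group_thresholds(scores, groups, target_rate=0.5)
preds = predict(scores, groups, thresholds)
print(thresholds, preds)
```

With a single global threshold of 0.5, every row in group "a" and only one row in group "b" would be selected; the per-group thresholds select half of each group instead.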

Continuous Monitoring and Human Oversight

Achieving a 15% bias reduction is not a one-time event; it requires ongoing vigilance. AI models are dynamic systems, and their performance, including fairness, can drift over time due to changes in data distributions or external factors.

Establishing robust monitoring frameworks and integrating human oversight are crucial for maintaining fairness and identifying new biases as they emerge. This continuous feedback loop is essential for sustainable ethical AI development.

Implementing Fairness Dashboards and Alerts

Automated dashboards can track fairness metrics in real time, providing immediate visibility into potential issues. These dashboards should be customizable to display metrics relevant to your specific AI application and target groups.

Setting up alerts for significant deviations in fairness metrics can prompt timely investigation and intervention. This proactive approach helps prevent minor biases from escalating into major problems.
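An alert rule of this kind can be as simple as comparing each current metric with its documented baseline. The sketch below is a minimal illustration: the metric names, the values, and the 0.02 tolerance are hypothetical, and it assumes every metric is a gap where larger means less fair.

```python
def fairness_alerts(baseline, current, tolerance=0.02):
    """Return metrics whose current gap exceeds the baseline by more than tolerance."""
    return {
        name: {"baseline": baseline[name], "current": value}
        for name, value in current.items()
        if name in baseline and value - baseline[name] > tolerance
    }

# Hypothetical values: gaps recorded after mitigation vs. gaps seen this week.
baseline = {"demographic_parity_gap": 0.17, "equal_opportunity_gap": 0.10}
current = {"demographic_parity_gap": 0.22, "equal_opportunity_gap": 0.11}

alerts = fairness_alerts(baseline, current)
for name, values in alerts.items():
    print(f"ALERT {name}: {values['baseline']:.2f} -> {values['current']:.2f}")
```

In practice the `current` values would come from a scheduled job that recomputes the metrics on recent predictions, and the alert would be routed to whoever owns the model.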

The Role of Human-in-the-Loop

While automation is powerful, human expertise remains indispensable. Human review of AI decisions, especially in critical applications, can catch subtle biases that automated metrics might miss.

Establishing diverse review panels can ensure a broader perspective on fairness and help identify biases that might be culturally or contextually specific. This collaborative approach combines the efficiency of AI with the nuanced understanding of human judgment.

Building an Ethical AI Culture and Governance

Achieving and sustaining a significant reduction in AI bias goes beyond technical solutions; it requires a deep cultural shift within an organization. Fostering an environment where ethical considerations are paramount is foundational for responsible AI.

Establishing clear governance structures and policies ensures that ethical AI principles are integrated into every stage of the development and deployment process, from conception to retirement.

Cross-functional Collaboration and Training

Ethical AI development is not solely the responsibility of data scientists or engineers. It requires collaboration across various departments, including legal, ethics, product, and business teams.

- Interdisciplinary Teams: Encourage diverse teams to contribute insights and perspectives on potential biases.

- Bias Awareness Training: Provide regular training on AI ethics and bias detection for all stakeholders involved in AI development.

- Ethical Guidelines: Develop and disseminate clear ethical guidelines that inform all AI-related decisions.

This collaborative approach ensures that ethical considerations are embedded from the initial stages of project planning, leading to more robust and trustworthy AI systems.

Establishing Clear AI Governance Policies

Formal AI governance policies provide the framework for responsible AI development and deployment. These policies should outline roles, responsibilities, and decision-making processes related to AI ethics.

Regular audits of AI systems for bias and adherence to ethical guidelines are crucial. Transparency in reporting and a commitment to continuous improvement are hallmarks of strong AI governance, ensuring long-term ethical AI development.

| Key Strategy | Brief Description |
|---|---|
| Data Pre-processing | Clean and balance datasets to remove inherent biases before model training. |
| Model Fairness Training | Adjust algorithms and incorporate fairness constraints during the model training phase. |
| Continuous Monitoring | Implement dashboards and human oversight to track and address bias drift in real-time. |
| Ethical Governance | Establish policies and foster a culture of ethical AI development across the organization. |

Frequently Asked Questions About AI Bias Reduction

What is AI bias, and why does addressing it matter?

AI bias refers to systematic errors in an AI system’s output that lead to unfair outcomes for certain groups. Addressing it is crucial for ensuring equitable treatment, maintaining public trust, and complying with ethical standards and regulations.

Can AI bias be completely eliminated?

Complete elimination of AI bias is challenging due to inherent complexities in data and human decision-making. However, significant reduction is achievable through proactive strategies, continuous monitoring, and a commitment to ethical AI development practices.

How can bias in AI models be measured?

Bias can be measured using various fairness metrics like demographic parity, equal opportunity, and predictive equality. These metrics assess disparities in model outcomes across different sensitive groups, providing a quantitative understanding of bias.

What role does data play in AI bias?

Data plays a fundamental role. Biased data leads to biased models. Strategies like representative sampling, data augmentation, and careful pre-processing are essential to ensure the data fed to AI models is as fair and balanced as possible.

Is a 15% bias reduction in three months realistic?

Yes, a 15% reduction is a realistic and achievable goal with focused effort. By implementing targeted data-centric and model-centric strategies, coupled with continuous monitoring, organizations can see significant improvements in bias within a quarter.

Conclusion

Ethical AI development, including a goal such as reducing bias in your models by 15% within three months, is an ongoing commitment rather than a single destination. By systematically addressing bias at every stage, from meticulous data preparation and thoughtful algorithm design to continuous monitoring and robust governance, organizations can build AI systems that are not only powerful but also fair and equitable. Embracing these practical solutions will not only mitigate risks but also foster greater trust and unlock the full, positive potential of artificial intelligence for all.