Next-Gen AI: Unsupervised & Reinforcement Learning in 2025

The future of AI in 2025 is rapidly moving beyond supervised learning, embracing unsupervised and reinforcement learning to unlock unprecedented capabilities in data analysis, autonomous systems, and complex decision-making processes.

Beyond Supervised Learning: Exploring 3 Unsupervised and Reinforcement Learning Paradigms for Next-Gen AI in 2025

The landscape of artificial intelligence is undergoing a profound transformation, pushing the boundaries of what machines can learn and achieve. While supervised learning has driven much of AI’s success to date, the future, particularly by 2025, lies increasingly in paradigms that require less human intervention or learn through interaction. This article delves into unsupervised and reinforcement learning, examining three pivotal paradigms set to redefine next-generation AI.

The shift towards more autonomous learning methods is not merely an academic exercise; it represents a critical evolution in how AI systems can tackle real-world complexities. By moving past the need for vast, labeled datasets, these advanced paradigms promise greater adaptability, efficiency, and the ability to discover novel insights.

The Evolution from Supervised Learning: Why Now?

Supervised learning, for all its triumphs, fundamentally relies on human-labeled data. This dependency can be a significant bottleneck, especially in scenarios where data labeling is prohibitively expensive, time-consuming, or simply impossible. In emerging fields or highly dynamic environments, where patterns change faster than labels can be produced, these limitations become glaringly apparent.

As AI systems become more pervasive, the demand for models that can learn from raw, unstructured data or through trial and error has skyrocketed. This is driven by the sheer volume of unlabeled data generated daily, from sensor readings to social media feeds, and the need for AI to operate in complex, unpredictable environments without constant human guidance.

Data Scarcity and Cost

Obtaining and meticulously labeling large datasets is a monumental task. Many organizations face significant hurdles in creating a suitable dataset for their supervised learning models, and the cost and time involved often limit the scope and scalability of AI applications. Paradigms that learn without manual labels ease this bottleneck in several ways:

- Reduced dependency on human annotators.

- Faster deployment of AI solutions in new domains.

- Lower operational costs for data preparation.

- Ability to leverage vast amounts of unlabeled data.

The transition away from absolute reliance on supervised methods is therefore a strategic imperative for AI development. It enables AI to unlock new frontiers where traditional approaches fall short, fostering innovation across industries.

The current trajectory suggests that by 2025, AI models will be far more adept at self-sufficiency, learning from their experiences and the inherent structure of data rather than solely from explicit instructions. This evolution signifies a maturation of the field, moving towards more intelligent and adaptable AI systems.

Unsupervised Learning: Discovering Hidden Patterns

Unsupervised learning stands in stark contrast to its supervised counterpart by operating without labeled data. Instead, it aims to uncover inherent structures, patterns, and relationships within data. This paradigm is particularly powerful for exploratory data analysis, anomaly detection, and data compression, where the ‘answers’ are not explicitly provided.

By allowing algorithms to find their own insights, unsupervised learning can reveal previously unknown categories or correlations that might be missed by human analysts. This makes it invaluable for large, complex datasets typical of modern AI challenges.

Clustering Algorithms: Grouping Similarities

Clustering is a foundational technique in unsupervised learning, where algorithms group data points into clusters such that items in the same cluster are more similar to each other than to those in other clusters. K-Means and hierarchical clustering are prime examples.

These algorithms are crucial for customer segmentation, identifying distinct biological populations, or organizing vast document collections without prior categorization. The beauty lies in their ability to perform this categorization organically.

- K-Means: Divides data into K clusters based on proximity to centroids.

- Hierarchical Clustering: Builds a tree of clusters, useful for understanding data structure at different granularities.

- DBSCAN: Identifies clusters based on density, effective for finding arbitrarily shaped clusters and outliers.
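To make the K-Means description concrete, here is a minimal sketch in plain Python. It alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its members. The sample points, the function names, and the deterministic farthest-first initialization are all assumptions made for illustration (library implementations typically use randomized seeding instead); this is not a production implementation.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_first_init(points, k):
    """Deterministic seeding (an illustrative choice): start from the first
    point, then repeatedly add the point farthest from all chosen centroids."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(squared_distance(p, c) for c in centroids))
        )
    return centroids


def k_means(points, k, max_iterations=100):
    """Return (centroids, labels) after alternating assignment and update steps."""
    centroids = farthest_first_init(points, k)
    labels = None
    for _ in range(max_iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:  # no point changed cluster, so we have converged
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, labels


# Hypothetical 2-D data with two visually obvious groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
centroids, labels = k_means(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

Note that no labels were supplied: the grouping emerges purely from distances between points, which is what distinguishes this from a supervised classifier.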

The insights gained from clustering can directly inform subsequent supervised learning tasks or provide standalone value for decision-making. Its role in preprocessing and understanding complex datasets cannot be overstated in the context of next-gen AI.

Another significant aspect of unsupervised learning is dimensionality reduction. Principal Component Analysis (PCA) projects high-dimensional data onto the directions of greatest variance, while t-Distributed Stochastic Neighbor Embedding (t-SNE) builds a low-dimensional map that preserves local neighborhood structure and is used mainly for visualization. Both make complex data easier to visualize and process, which is vital for managing the ever-increasing complexity of data in 2025.
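As a rough illustration of the PCA idea, the sketch below finds the first principal component of a small made-up 2-D dataset and projects the data onto it, reducing two dimensions to one. Power iteration on the covariance matrix is used here only because it fits in a few lines of standard-library Python; the data and all function names are assumptions for illustration, and real pipelines would more likely rely on a numerical library.

```python
import math


def mean_center(rows):
    """Subtract the per-column mean so each feature has mean zero."""
    n = len(rows)
    means = [sum(col) / n for col in zip(*rows)]
    return [[x - m for x, m in zip(row, means)] for row in rows]


def covariance_matrix(centered):
    """Sample covariance matrix of mean-centered rows."""
    n, d = len(centered), len(centered[0])
    return [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]


def first_principal_component(cov, iterations=200):
    """Power iteration: repeatedly multiply by the covariance matrix and
    renormalize, converging to the unit eigenvector with the largest eigenvalue."""
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


# Hypothetical data lying close to the line y = x.
data = [[2.0, 1.9], [1.0, 1.1], [3.0, 3.2], [4.0, 3.8], [5.0, 5.1]]
centered = mean_center(data)
component = first_principal_component(covariance_matrix(centered))

# Project each 2-D point onto the component to get a 1-D representation.
projected = [sum(x * c for x, c in zip(row, component)) for row in centered]
print(component)
print(projected)
```

Because the sample points lie near a diagonal line, the recovered direction has two nearly equal coordinates, and the single projected value per point retains most of the spread in the data.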

Reinforcement Learning: Learning Through Interaction

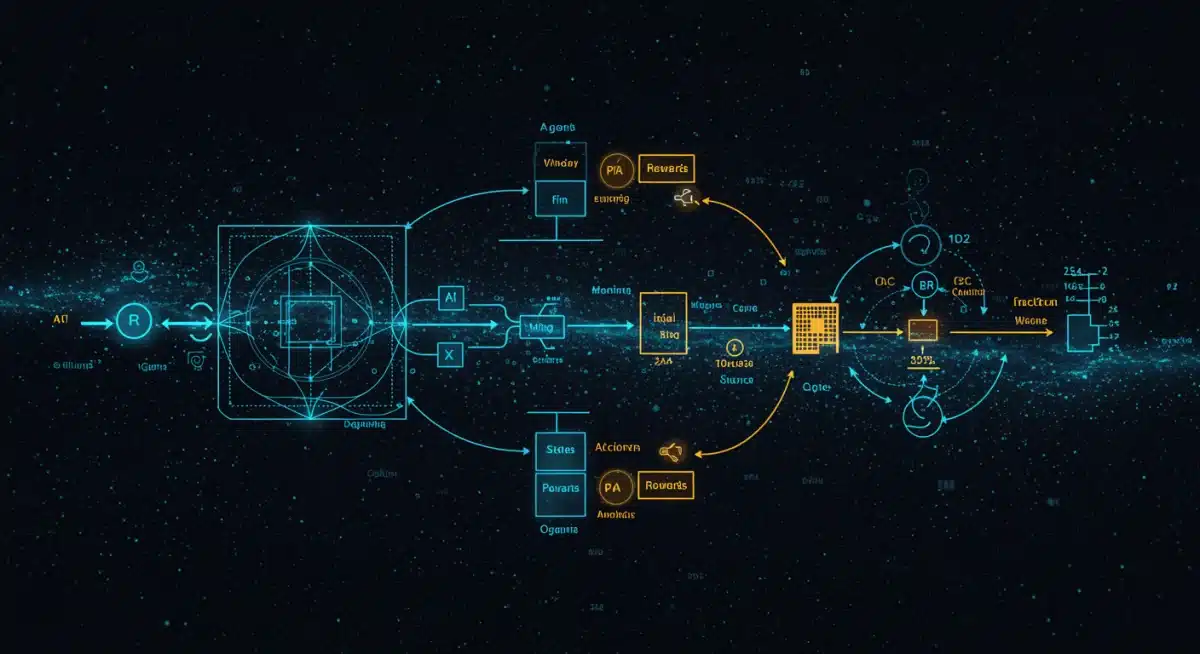

Reinforcement learning (RL) represents a fundamentally different approach, where an AI agent learns to make decisions by interacting with an environment. It’s akin to how humans and animals learn: through trial and error, receiving rewards for desirable actions and penalties for undesirable ones. The goal is to maximize cumulative reward over time.
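One common way to formalize "cumulative reward over time" is the discounted return. The article does not define it, so the notation here is an assumption: $r_{t+k+1}$ is the reward received $k+1$ steps after time $t$, and $\gamma$ is a discount factor that weights near-term rewards more heavily than distant ones.

$$
G_t = \sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k+1}, \qquad 0 \le \gamma \le 1
$$

A value of $\gamma < 1$ is typically used for tasks that never terminate, so that the sum stays finite when rewards are bounded.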

This paradigm is exceptionally well-suited for dynamic environments, such as robotics, game playing, and autonomous navigation, where explicit programming for every possible scenario is impractical or impossible. RL allows agents to discover optimal strategies independently.

Agent, Environment, State, Action, Reward

Understanding RL requires grasping its core components. The agent performs actions within an environment. The environment, in turn, transitions to a new state and provides a reward (or penalty) to the agent. This feedback loop drives the learning process, allowing the agent to refine its policy – a mapping from states to actions.

The challenge lies in balancing exploration (trying new actions to discover better rewards) and exploitation (using known good actions to maximize immediate reward). This exploration-exploitation dilemma is central to designing effective RL algorithms.

- Agent: The learner or decision-maker.

- Environment: The world with which the agent interacts.

- State: The current situation of the agent in the environment.

- Action: A move made by the agent.

- Reward: Feedback from the environment, indicating the desirability of an action.
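The components listed above, and the exploration-exploitation trade-off, can be sketched with tabular Q-learning and an epsilon-greedy policy. Everything specific here is an assumption chosen for illustration: the five-state corridor environment, the reward of 1.0 at the goal, the hyperparameters, and the function names. Q-learning is one of many RL algorithms and is used only because it is compact.

```python
import random

N_STATES = 5      # states 0..4 in a corridor; state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Environment: apply an action and return (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q_row, epsilon, rng):
    """Epsilon-greedy policy: explore with probability epsilon, otherwise
    exploit the best-known action (ties broken at random)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q_row)
    return rng.choice([a for a in ACTIONS if q_row[a] == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Agent: learn a table of action values Q[state][action] by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _episode in range(episodes):
        state = 0
        for _t in range(100):  # cap on episode length
            action = choose_action(q[state], epsilon, rng)
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best value of the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
# Greedy policy derived from the learned values, for each non-terminal state.
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

In this toy corridor the learned policy should settle on moving right in every state, since that is the only route to the reward. The `epsilon` parameter controls how often the agent tries a random action instead of the one it currently believes is best.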

The power of RL lies in its ability to learn complex behaviors without explicit supervision, making it a cornerstone for developing truly autonomous and adaptive AI systems by 2025. Its application extends from optimizing industrial processes to creating intelligent personal assistants.

As AI systems become more integrated into our daily lives, particularly in autonomous vehicles and intelligent homes, reinforcement learning will play an increasingly critical role. Its capacity to keep adapting from ongoing feedback is a key reason it suits these settings.

Hybrid Approaches: Combining Strengths for Robust AI

While unsupervised and reinforcement learning offer significant advancements individually, their true potential often shines when combined, or when integrated with elements of supervised learning. These hybrid approaches leverage the strengths of each paradigm to create more robust, efficient, and intelligent AI systems. The complexity of real-world problems frequently demands such multifaceted solutions.

For instance, unsupervised learning can preprocess vast amounts of unlabeled data to extract meaningful features, which can then be used to train a reinforcement learning agent more efficiently. This reduces the burden on the RL agent to learn everything from scratch, speeding up convergence and improving performance.

Unsupervised Pre-training for Reinforcement Learning

One powerful hybrid strategy involves using unsupervised learning to pre-train components of an RL system. For example, autoencoders or generative adversarial networks (GANs) can learn rich, low-dimensional representations of high-dimensional observations (like images or raw sensor data). These compressed representations can then serve as inputs to an RL agent.

This pre-training helps the RL agent focus on learning optimal actions rather than simultaneously learning meaningful features from raw, noisy data. It’s a way to provide the agent with a more refined understanding of its environment before it even begins to learn complex behaviors.

- Reduces the dimensionality of input data for RL.

- Extracts salient features, making RL learning more efficient.

- Can handle environments with sparse rewards more effectively.

- Improves generalization capabilities of the RL agent.

Such hybrid models are becoming increasingly important in scenarios where data is abundant but labels are scarce, or where the environment is complex and requires both pattern recognition and strategic decision-making. This synergy is key to advanced AI in 2025.

Another compelling hybrid application is in inverse reinforcement learning, where the goal is to infer the reward function that an expert agent is optimizing, often from demonstrations. This combines elements of supervised learning (observing expert behavior) with reinforcement learning principles to understand underlying goals.

Self-Supervised Learning: The Best of Both Worlds

Self-supervised learning (SSL) is an exciting paradigm that sits at the intersection of supervised and unsupervised learning. It generates its own supervisory signals from the data itself, without requiring manual labels. This allows models to learn powerful representations from vast amounts of unlabeled data, much like unsupervised methods, but with a more defined learning objective similar to supervised tasks.

The core idea is to create a pretext task, where part of the input is used to predict another part, or where a transformation of the input is predicted. For example, predicting missing words in a sentence, predicting the rotation applied to an image, or predicting the next frame in a video sequence.

Pretext Tasks and Representation Learning

The success of SSL hinges on designing effective pretext tasks that force the model to learn semantically meaningful representations. These representations, often learned by large neural networks, can then be transferred to downstream tasks (like classification or object detection) with significantly less labeled data than traditional supervised approaches would require.

This makes SSL incredibly valuable for domains where labeled data is scarce but unlabeled data is plentiful, such as medical imaging or natural language processing. It allows AI models to build a foundational understanding of the data’s structure and content.

- Predicting missing parts of an input (e.g., masked language modeling).

- Predicting the relative position of patches in an image.

- Predicting the future context or transformation of data.

- Learning robust representations without human labels.
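A pretext task of the "predict the missing part" kind can be sketched without any model at all, because the essential step is turning unlabeled data into (input, target) pairs. The snippet below hides randomly chosen words in a sentence and records what was hidden. The `[MASK]` placeholder string, the whitespace tokenization, and the function name are simplifying assumptions for illustration; real systems use learned tokenizers and more elaborate masking schemes.

```python
import random

MASK = "[MASK]"  # placeholder token (an illustrative choice)


def make_masked_example(sentence, mask_count=1, seed=0):
    """Build one self-supervised training pair from raw text.

    Returns (masked_tokens, targets), where targets maps each masked
    position to the original word the model would be asked to predict.
    """
    rng = random.Random(seed)
    tokens = sentence.split()
    positions = set(rng.sample(range(len(tokens)), mask_count))
    masked_tokens = [MASK if i in positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(positions)}
    return masked_tokens, targets


sentence = "the agent learns from unlabeled data"
masked, targets = make_masked_example(sentence, mask_count=2)
print(masked)
print(targets)
```

The supervisory signal (`targets`) comes entirely from the sentence itself, so any amount of raw text can be converted into training examples without a human annotator.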

SSL has demonstrated remarkable success in natural language processing, where models like BERT and GPT have leveraged self-supervised pre-training to achieve state-of-the-art performance, and in computer vision, where contrastive methods such as SimCLR learn image representations without labels. This paradigm is set to reshape how AI models are trained and deployed by 2025, significantly reducing the reliance on costly human annotation.

The representations learned through self-supervision are often highly generalizable, meaning they can be effectively applied to a wide range of tasks with minimal fine-tuning. This efficiency and adaptability are crucial for developing versatile AI solutions.

Ethical Considerations and Future Outlook in 2025

As we delve deeper into advanced AI paradigms like unsupervised and reinforcement learning, it’s paramount to address the ethical implications and societal impact. The increased autonomy and complexity of these systems raise questions about bias, transparency, accountability, and control. By 2025, these considerations will be at the forefront of AI development and regulation.

Unsupervised learning, while powerful, can inadvertently perpetuate biases present in the unlabeled data it processes. If certain demographic groups are underrepresented or data reflects societal inequalities, the patterns discovered by unsupervised algorithms might reinforce these biases, leading to unfair or discriminatory outcomes.

Bias, Transparency, and Explainability

Reinforcement learning agents, learning from trial and error, might discover unexpected or opaque strategies to maximize rewards, making it difficult for humans to understand their decision-making process. This lack of transparency, often referred to as the ‘black box’ problem, poses significant challenges for accountability, especially in critical applications like autonomous vehicles or medical diagnosis.

Ensuring fairness, building explainable AI (XAI) models, and establishing robust governance frameworks are not just technical challenges but ethical imperatives. The future of AI hinges on our ability to develop these powerful systems responsibly.

- Developing methods to detect and mitigate bias in unsupervised models.

- Creating explainable AI techniques to understand RL agent decisions.

- Establishing regulatory frameworks for autonomous AI systems.

- Promoting human-in-the-loop approaches for critical AI applications.

The ongoing research in interpretable machine learning and AI ethics is crucial for navigating these challenges. By 2025, responsible AI development will be a key differentiator and a requirement for public trust and widespread adoption.

Furthermore, the increasing autonomy of RL agents in complex environments necessitates a robust discussion on control and safety. How do we ensure that AI systems, designed to optimize for a specific reward, do not inadvertently cause harm or pursue goals misaligned with human values? These are not trivial questions and require interdisciplinary collaboration to address effectively.

The Road Ahead: Integrating Advanced Learning for 2025

The journey beyond supervised learning is not just about adopting new algorithms; it’s about fundamentally rethinking how AI systems learn, adapt, and interact with the world. Unsupervised, reinforcement, and self-supervised learning, particularly when integrated into hybrid approaches, represent the vanguard of next-generation AI. By 2025, these methods will empower AI systems to learn more autonomously, discover deeper insights from complex data, and operate effectively in dynamic real-world environments.

By 2025, we anticipate a significant acceleration in the deployment of AI systems that leverage these advanced learning methods across various sectors. From personalized medicine to climate modeling, the ability of AI to learn from raw data and through dynamic interaction will unlock unprecedented opportunities.

Key Areas of Impact

The impact of these paradigms will be felt across numerous industries. In manufacturing, RL agents will optimize complex supply chains and robotic assembly lines. In healthcare, unsupervised learning will uncover subtle patterns in patient data for early disease detection, while SSL will aid in medical image analysis with minimal labeled data.

- Autonomous Systems: Enhanced navigation and decision-making for self-driving cars and drones.

- Drug Discovery: Accelerating the identification of new compounds and therapies through pattern recognition.

- Personalized Education: AI tutors adapting to individual learning styles and needs.

- Financial Modeling: Detecting anomalies and optimizing trading strategies in complex markets.

The integration of these advanced learning paradigms will not only lead to more efficient and effective AI solutions but also foster a deeper understanding of intelligence itself. The future of AI is not just about building smarter machines, but about building machines that can learn more like us, and perhaps even better, from the vast, unstructured tapestry of the world.

The continuous evolution of computational power, coupled with innovative algorithmic research, will continue to push the boundaries of what is possible. The coming years promise a fascinating period of discovery and application, fundamentally reshaping our technological landscape.

| Paradigm | Key Characteristic |
|---|---|
| Unsupervised Learning | Discovers hidden patterns and structures in unlabeled data without human guidance. |
| Reinforcement Learning | Learns optimal actions through trial and error by interacting with an environment to maximize rewards. |
| Self-Supervised Learning | Generates its own supervisory signals from data, learning robust representations without manual labels. |
| Hybrid Approaches | Combines strengths of different paradigms for more robust and efficient AI solutions. |

Frequently Asked Questions About Next-Gen AI

What are the main limitations of supervised learning?

Supervised learning primarily relies on large, manually labeled datasets, which can be expensive and time-consuming to create. It struggles with novel data patterns not seen during training and is less adaptable to dynamic environments, limiting its scalability and application in data-scarce or rapidly evolving domains.

What role will unsupervised learning play by 2025?

By 2025, unsupervised learning will be crucial for discovering hidden patterns in vast amounts of unlabeled data, enabling advanced anomaly detection, customer segmentation, and efficient data compression. It helps AI systems understand inherent data structures, which is vital for preprocessing and gaining insights from complex, real-world datasets.

Why is reinforcement learning important for autonomous systems?

Reinforcement learning is fundamental for autonomous systems as it allows AI agents to learn optimal decision-making strategies through interaction with their environment. This trial-and-error process, driven by rewards, is essential for developing self-driving cars, robotics, and other systems that must adapt to dynamic and unpredictable real-world scenarios.

What is self-supervised learning, and why is it important?

Self-supervised learning generates its own supervisory signals from unlabeled data, allowing models to learn powerful data representations without manual annotation. It’s important because it significantly reduces reliance on costly labeled datasets, making AI more accessible and scalable, especially in fields like natural language processing and computer vision by 2025.

What ethical considerations apply to next-gen AI in 2025?

Ethical considerations for next-gen AI in 2025 include addressing potential biases embedded in training data, ensuring transparency in decision-making processes (explainable AI), and establishing accountability for autonomous systems. Developing robust governance and safety protocols is crucial to ensure responsible and beneficial AI deployment.

Conclusion

The evolution of AI beyond traditional supervised learning is not merely a technological advancement but a shift in how systems acquire knowledge: from labeled examples alone to the structure of raw data and feedback from interaction. Unsupervised, reinforcement, and self-supervised learning each reduce the dependence on human annotation, and hybrid approaches combine their strengths. While significant ethical challenges regarding bias, transparency, and control remain, concerted efforts in responsible AI development will pave the way for a future where AI systems are not only more intelligent but also more aligned with human values and societal good.